DiVincenzo's criteria

The DiVincenzo criteria is a list of conditions that are necessary for constructing a quantum computer proposed by the theoretical physicist David P. DiVincenzo in his 2000 paper "The Physical Implementation of Quantum Computation".[1] Quantum computation was first proposed by Richard Feynman[2] (1982) as a means to efficiently simulate quantum systems. There have been many proposals of how to construct a quantum computer, all of which have varying degrees of success against the different challenges of constructing quantum devices. Some of these proposals involve using superconducting qubits, trapped ions, liquid and solid state nuclear magnetic resonance or optical cluster states all of which have remarkable prospects, however, they all have issues that prevent practical implementation. The DiVincenzo criteria are a list of conditions that are necessary for constructing the quantum computer as proposed by Feynman.

The DiVincenzo criteria consist of 5+2 conditions that an experimental setup must satisfy in order to successfully implement quantum algorithms such as Grover's search algorithm or Shor factorisation. The 2 additional conditions are necessary in order to implement quantum communication such as that used in quantum key distribution. One can consider DiVincenzo's criteria for a classical computer and demonstrate that these are satisfied. Comparing each statement between the classical and quantum regimes highlights both the complications that arise in dealing with quantum systems and the source of the quantum speed up.

Statement of the criteria

In order to construct a quantum computer the following conditions must be met by the experimental setup. The first five are necessary for quantum computation and the remaining two are necessary for quantum communication.

- A scalable physical system with well characterised qubits.

- The ability to initialise the state of the qubits to a simple fiducial state.

- Long relevant decoherence times.

- A “universal” set of quantum gates.

- A qubit-specific measurement capability.

- The ability to interconvert stationary and flying qubits.

- The ability to faithfully transmit flying qubits between specified locations.

Why the DiVincenzo criteria?

Divincenzo's criteria was proposed after many attempts of constructing a quantum computer. Below we state why these statements are important and present examples to highlight these facts.

Scalable with well characterised qubits

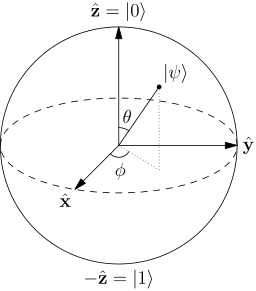

Most models of quantum computation require the use of qubits for computation. Some models use qudits and in this case the first statement is logically extended. Quantum mechanically a qubit is defined as 2 level system with some energy gap. This can sometimes be difficult to implement physically and so we can focus on a particular transition of atomic levels, etc. Whatever the system we choose, we require that the system remain (almost) always in the subspace of these two levels and in doing so we can say it is a well characterised qubit. An example of a system that is not well characterised would be 2 one-electron quantum dots (potential wells where we only allow for single electron occupations). The electron being in one well or the other is properly characterised as a single qubit, however if we consider a state such as then this would correspond to a two qubit state. Such a state is not physically allowed (we only permit single electron occupation) and so we cannot say that we have 2 well characterised qubits.

With today's technology it is simple to create a system that has a well characterised qubit, however it is a challenge to create a system that has an arbitrary number of well characterised qubits. Currently one of the biggest problems being faced is that we require exponentially larger experimental setups in order to accommodate greater number of qubits. The quantum computer is capable of exponential speed ups on current classical algorithms for prime factorisation of numbers, however if this requires an exponentially large setup then our advantage is lost. In the case of liquid state nuclear magnetic resonance[3] it was found that the macroscopic size of the system caused the computational 'qubits' to be initialised in a highly mixed state. In spite of this a computation model was found that could still use these mixed states for computation, however the more mixed these states are the weaker the induction signal corresponding to a quantum measurement. If this signal is below the noise level a solution is to increase the size of the sample to boost the signal strength, and this is the source of the non-scalability of liquid state NMR as a means for quantum computation. One could say that as the number of computational qubits increases they become less well characterised until we reach a threshold in which they are no longer useful.

The ability to initialise the state of the qubits to a simple fiducial state

All models of quantum computation (and classical computation) are based on performing some operations on a state (qubit or bit) and finally measuring/reading out a result, a procedure that is dependent on the initial state of the system. In particular, the unitary nature of quantum mechanics makes initialisation of the qubits extremely important. In many cases the approach to initialise a state is to let the system anneal into the ground state and then we can start the computation. This is of particular importance when you consider Quantum Error Correction, a procedure to perform quantum processes that are robust to certain types of noise, that requires a large supply of fresh initialised qubits. This places restrictions on how fast the initialisation needs to be. An example of annealing is described in Petta et al.[4] (2005) where a Bell pair of electrons is prepared in quantum dots. This procedure relies on T1 to anneal the system and the paper is dedicated to measuring the T2 relaxation time of the quantum dot system. This quantum dot system gives an idea of the timescales involved in initialising a system by annealing (~milliseconds) and this would become a fundamental issue given that the decoherence time is shorter than the initialisation time. Alternative approaches (usually involving optical pumping[5]) have been developed to reduce the initialisation time and improve the fidelity of the procedure.

Long relevant decoherence times

The emergence classicality in large quantum systems comes about from the increased decoherence experienced by macroscopic systems. The timescale associated with this loss of quantum behaviour then becomes important when constructing large quantum computation systems. The quantum resources used by quantum computing models (superposition and/or entanglement) are quickly destroyed by decoherence and so long decoherence times are desired, much longer than the average gate time so that we can combat decoherence with error correction and/or dynamical decoupling. In solid state NMR using NV centres the orbital electron experiences short decoherence times making computations problematic. The proposed solution has been to encode the qubit into the nuclear spin of the nitrogen atom and this increases the decoherence time. In other systems such as the quantum dot we have issues with strong environmental effects limiting the T2 decoherence time. One of the problems in satisfying this criteria is that systems that can be manipulated quickly (through strong interactions) tend to experience decoherence via these very same strong interactions and so there is a trade-off between ability to implement control and increased decoherence.

A “universal” set of quantum gates

Both in classical computing and quantum computing the algorithms that we are permitted to implement are restricted by the gates we are capable of implementing on the state. In the case of quantum computing we can construct a universal quantum computer (a quantum Turing machine) with a very small set of 1 and 2 qubit gates. Any experimental setup that manages to have well characterised qubits, quick faithful initialisation and long decoherence times must also be capable of influencing the Hamiltonian of the system in order to implement coherent changes capable of implementing the universal set of gates. Perfect implementation of gates is not always necessary as gate sequences can be created that are more robust to certain systematic and random noise models.[6] Liquid state NMR was one of the first setups capable of implementing a universal set of gates through the use of precise timing and magnetic field pulses, however as mentioned above this system was not scalable.

A qubit-specific measurement capability

For any process applied to a quantum state of qubits the final measurement is of fundamental importance when performing computations. If our system allows for non-demolition projective measurements then we can in principle use this for state preparation. Measurement is at the corner of all quantum algorithms, especially in concepts such as teleportation. Note that some measurement techniques are not 100% efficient and so these tend to be corrected by repeating the experiment in order to increase the success rate. Examples of reliable measurement devices are found in optical systems where homodyne detectors have reached the point of reliably counting how many photons have passed through the detecting cross-section. For more challenging measurement systems we can look at quantum dots in Petta et al.[4] (2005) where they use the energy gap between the and to measure the relative spins of the 2 electrons.

The ability to interconvert stationary and flying qubits and the ability to faithfully transmit flying qubits between specified locations

These two conditions are only necessary when considering quantum communication protocols such as quantum key distribution that involve exchange of coherent quantum states or exchange of entangled qubits (for example the BB84 protocol). When creating pairs of entangled qubits in some experimental set up usually these qubits are 'stationary' and cannot be moved from the laboratory. If these qubits can be teleported to flying qubits such as encoded into the polarisation of a photon then we can consider sending entangled photons to a third party and having them extract that information, leaving two entangled stationary qubits at two different locations. The ability to transmit the flying qubit without decoherence is major problem. Currently at the Institute for Quantum Computing there are efforts to produce a pair of entangled photons and transmit one of the photons to some other part of the world by reflecting off of a satellite. The main issue now is the decoherence the photon experiences whilst interacting with particles in the atmosphere. Similarly some attempts have been made to use optical fibres, however the attenuation of the signal has stopped this from being a reality.

Acknowledgements

This article is based principally on DiVincenzo's 2000[1] paper and lectures from the Perimeter Institute for Theoretical Physics.

See also

References

- 1 2 DiVincenzo, David P. (2000-04-13). "The Physical Implementation of Quantum Computation". arXiv:quant-ph/0002077

[quant-ph].

[quant-ph]. - ↑ Feynman, R. P. (June 1982). "Simulating physics with computers". International Journal of Theoretical Physics. 21 (6): 467–488. doi:10.1007/BF02650179.

- ↑ Menicucci NC, Caves CM (2002). "Local realistic model for the dynamics of bulk-ensemble NMR information processing". Physical Review Letters. 88 (16). arXiv:quant-ph/0111152

. Bibcode:2002PhRvL..88p7901M. doi:10.1103/PhysRevLett.88.167901.

. Bibcode:2002PhRvL..88p7901M. doi:10.1103/PhysRevLett.88.167901. - 1 2 Petta, J. R.; Johnson, A. C.; Taylor, J. M.; Laird, E. A.; Yacoby, A.; Lukin, M. D.; Marcus, C. M.; Hanson, M. P.; Gossard, A. C. (September 2005). "Coherent Manipulation of Coupled Electron Spins in Semiconductor Quantum Dots". Science. 309 (5744): 2180–2184. doi:10.1126/science.1116955.

- ↑ Atatüre, Mete; Dreiser, Jan; Badolato, Antonio; Högele, Alexander; Karrai, Khaled; Imamoglu, Atac (April 2006). "Quantum-Dot Spin-State Preparation with Near-Unity Fidelity". Science. 312 (5773): 551–553. doi:10.1126/science.1126074.

- ↑ Green, Todd J.; Sastrawan, Jarrah; Uys, Hermann; Biercuk, Michael J. (September 2013). "Arbitrary quantum control of qubits in the presence of universal noise". New Journal of Physics. 15 (9): 095004. doi:10.1088/1367-2630/15/9/095004.