Semiparametric regression

| Part of a series on Statistics |

| Regression analysis |

|---|

|

| Models |

| Estimation |

| Background |

|

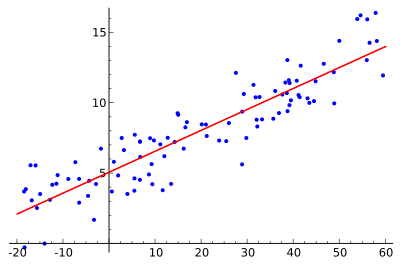

In statistics, semiparametric regression comprises regression models that combine parametric and nonparametric components. Such models are often used when a fully nonparametric model may not perform well, or when the researcher wants to use a parametric model but the functional form with respect to a subset of the regressors, or the density of the errors, is not known. Semiparametric regression models are a particular type of semiparametric modelling. Because they contain a parametric component, they rely on parametric assumptions and, just like a fully parametric model, may be misspecified and inconsistent.

Methods

Many different semiparametric regression methods have been proposed and developed. The most popular methods are the partially linear, index and varying coefficient models.

Partially linear models

A partially linear model is given by

$$Y_i = X_i'\beta + g(Z_i) + u_i, \quad i = 1, \ldots, n,$$

where $Y_i$ is the dependent variable, $X_i$ ($p \times 1$) and $Z_i$ are vectors of explanatory variables, $\beta$ is a $p \times 1$ vector of unknown parameters and $Z_i \in \mathbb{R}^q$. The parametric part of the partially linear model is given by the parameter vector $\beta$ while the nonparametric part is the unknown function $g(Z_i)$. The data are assumed to be i.i.d. with $E(u_i \mid X_i, Z_i) = 0$, and the model allows for a conditionally heteroskedastic error process $E(u_i^2 \mid x, z) = \sigma^2(x, z)$ of unknown form. This type of model was proposed by Robinson (1988) and extended to handle categorical covariates by Racine and Liu (2007).

This method is implemented by obtaining a $\sqrt{n}$-consistent estimator of $\beta$ and then deriving an estimator of $g(Z_i)$ from the nonparametric regression of $Y_i - X_i'\hat{\beta}$ on $Z_i$ using an appropriate nonparametric regression method.[1]
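The two-step procedure can be sketched in a few lines of NumPy. The sketch below is an illustration under simplifying assumptions, not Robinson's exact estimator: it uses a double-residual first step (smooth the dependent variable and each regressor on the nonparametric covariate, then run least squares on the residuals), a Gaussian kernel, a fixed hand-picked bandwidth `h`, a scalar nonparametric covariate, and no trimming. The helper names `nw_smooth` and `partially_linear_fit` and the simulated data are hypothetical.

```python
import numpy as np

def nw_smooth(z_train, target, z_eval, h):
    """Nadaraya-Watson regression of target on scalar z (Gaussian kernel)."""
    u = (z_eval[:, None] - z_train[None, :]) / h
    w = np.exp(-0.5 * u ** 2)
    w /= w.sum(axis=1, keepdims=True)   # each row of weights sums to one
    return w @ target                   # works for 1-D or 2-D targets

def partially_linear_fit(y, x, z, h):
    """Two-step fit of y = x @ beta + g(z) + u (hypothetical helper)."""
    # Step 1: remove the part of y and of each column of x explained by z.
    y_res = y - nw_smooth(z, y, z, h)
    x_res = x - nw_smooth(z, x, z, h)
    # Step 2: least squares on the residuals gives beta_hat.
    beta_hat, *_ = np.linalg.lstsq(x_res, y_res, rcond=None)
    # Step 3: regress y - x @ beta_hat on z nonparametrically to get g_hat.
    g_hat = nw_smooth(z, y - x @ beta_hat, z, h)
    return beta_hat, g_hat

# Simulated example (all values below are illustrative assumptions).
rng = np.random.default_rng(0)
n = 400
z = rng.uniform(0.0, 1.0, n)
x = np.column_stack([z + rng.normal(size=n), rng.normal(size=n)])
beta_true = np.array([1.0, -2.0])
y = x @ beta_true + np.sin(2 * np.pi * z) + 0.3 * rng.normal(size=n)

beta_hat, g_hat = partially_linear_fit(y, x, z, h=0.05)
print("beta_hat:", beta_hat)
```

In practice the bandwidth would be chosen by a data-driven rule such as cross-validation rather than fixed by hand, and a multivariate or mixed-data kernel would replace the scalar Gaussian kernel when there are several nonparametric covariates.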

Index models

A single index model takes the form

$$Y = g(X'\beta_0) + u,$$

where $Y$, $X$ and $\beta_0$ are defined as earlier and the error term $u$ satisfies $E(u \mid X) = 0$. The single index model takes its name from the parametric part of the model, $x'\beta$, which is a scalar single index. The nonparametric part is the unknown function $g(\cdot)$.

Ichimura's method

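The single index model method developed by Ichimura (1993) is as follows. Consider the situation in which $y$ is continuous. Given a known form for the function $g(\cdot)$, $\beta_0$ could be estimated using the nonlinear least squares method to minimize the function

$$\sum_{i=1}^{n} \left(Y_i - g(X_i'\beta)\right)^2.$$

Since the functional form of $g(\cdot)$ is not known, we need to estimate it. For a given value of $\beta$, an estimate of the function

$$G(X_i'\beta) = E(Y_i \mid X_i'\beta) = E[g(X_i'\beta_0) \mid X_i'\beta]$$

can be obtained using a kernel method. Ichimura (1993) proposes estimating $g(X_i'\beta)$ with

$$\hat{G}_{-i}(X_i'\beta),$$

the leave-one-out nonparametric kernel estimator of $G(X_i'\beta)$.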
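A minimal NumPy sketch of this idea follows: the unknown link function in the least squares criterion is replaced by a leave-one-out kernel regression of the dependent variable on the index, and the resulting objective is minimized over the index coefficients. Several choices here are assumptions of the sketch rather than part of Ichimura's method: the Gaussian kernel, the fixed bandwidth, the absence of trimming, and the grid search. Because the index coefficients are identified only up to scale and sign when the link is unknown, the sketch uses two regressors and writes the coefficient vector as `(cos(theta), sin(theta))` with `theta` in `[-pi/2, pi/2)`. The names `loo_kernel_mean` and `sls_objective` are hypothetical.

```python
import numpy as np

def loo_kernel_mean(index, y, h):
    """Leave-one-out Nadaraya-Watson estimate of E[y | index] at each sample point."""
    u = (index[:, None] - index[None, :]) / h
    w = np.exp(-0.5 * u ** 2)
    np.fill_diagonal(w, 0.0)            # exclude observation i from its own fit
    return (w @ y) / np.maximum(w.sum(axis=1), 1e-300)

def sls_objective(beta, x, y, h):
    """Sum of squared residuals with g replaced by the leave-one-out estimate."""
    return np.sum((y - loo_kernel_mean(x @ beta, y, h)) ** 2)

# Simulated example (illustrative values only).
rng = np.random.default_rng(1)
n = 300
x = rng.normal(size=(n, 2))
theta_true = 0.6
beta_true = np.array([np.cos(theta_true), np.sin(theta_true)])
y = np.sin(2 * (x @ beta_true)) + 0.2 * rng.normal(size=n)

# Grid search over the direction of beta on the unit half-circle.
thetas = np.linspace(-np.pi / 2, np.pi / 2, 181, endpoint=False)
losses = [sls_objective(np.array([np.cos(t), np.sin(t)]), x, y, h=0.2)
          for t in thetas]
theta_hat = thetas[int(np.argmin(losses))]
print("theta_hat:", theta_hat)
```

With more than two regressors, a numerical optimizer under a normalization such as fixing the first coefficient to one would take the place of the grid search.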

Klein and Spady's estimator

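If the dependent variable $y$ is binary and $X_i$ and $u_i$ are assumed to be independent, Klein and Spady (1993) propose a technique for estimating $\beta$ using maximum likelihood methods. The log-likelihood function is given by

$$L(\beta) = \sum_i (1 - Y_i)\ln\left(1 - \hat{g}_{-i}(X_i'\beta)\right) + \sum_i Y_i \ln\left(\hat{g}_{-i}(X_i'\beta)\right),$$

where $\hat{g}_{-i}(X_i'\beta)$ is the leave-one-out estimator.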
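The binary-response log-likelihood with a leave-one-out estimate of the response probability can be evaluated as in the following NumPy sketch. It is a simplified illustration, not Klein and Spady's exact procedure: the leave-one-out probability is a plain Nadaraya-Watson regression of the binary outcome on the index with a Gaussian kernel and fixed bandwidth, the estimated probabilities are clipped away from 0 and 1 instead of trimmed, and the index direction is found by grid search over two regressors with the coefficient vector normalized to unit length. The names `loo_prob` and `ks_loglik` are hypothetical.

```python
import numpy as np

def loo_prob(index, y, h):
    """Leave-one-out kernel estimate of P(y = 1 | index) at each sample point."""
    u = (index[:, None] - index[None, :]) / h
    w = np.exp(-0.5 * u ** 2)
    np.fill_diagonal(w, 0.0)            # exclude observation i from its own fit
    return (w @ y) / np.maximum(w.sum(axis=1), 1e-300)

def ks_loglik(beta, x, y, h, eps=1e-3):
    """Log-likelihood with the unknown link replaced by its leave-one-out estimate."""
    p = np.clip(loo_prob(x @ beta, y, h), eps, 1.0 - eps)  # keep the logs finite
    return np.sum((1.0 - y) * np.log(1.0 - p) + y * np.log(p))

# Simulated binary data with errors independent of the regressors (illustrative).
rng = np.random.default_rng(2)
n = 600
x = rng.normal(size=(n, 2))
theta_true = 0.6
beta_true = np.array([np.cos(theta_true), np.sin(theta_true)])
u = rng.normal(size=n)
y = (x @ beta_true + 0.5 * u > 0).astype(float)

# Grid search over the direction of beta on the unit half-circle.
thetas = np.linspace(-np.pi / 2, np.pi / 2, 91, endpoint=False)
logliks = [ks_loglik(np.array([np.cos(t), np.sin(t)]), x, y, h=0.3)
           for t in thetas]
theta_hat = thetas[int(np.argmax(logliks))]
print("theta_hat:", theta_hat)
```

The clipping constant `eps` is an ad hoc safeguard chosen for this sketch; it only prevents `log(0)` when every neighbour of an observation has the opposite outcome.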

Smooth coefficient/varying coefficient models

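Hastie and Tibshirani (1993) propose a smooth coefficient model given by

$$Y_i = \alpha(Z_i) + X_i'\beta(Z_i) + u_i = (1, X_i')\begin{pmatrix}\alpha(Z_i)\\ \beta(Z_i)\end{pmatrix} + u_i = W_i'\gamma(Z_i) + u_i,$$

where $X_i$ is a $k \times 1$ vector and $\beta(z)$ is a vector of unspecified smooth functions of $z$.

$\gamma(\cdot)$ may be expressed as

$$\gamma(Z_i) = \left(E[W_i W_i' \mid Z_i]\right)^{-1} E[W_i Y_i \mid Z_i].$$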
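One way to turn the conditional-moment expression for the coefficient functions into an estimator is to replace each conditional expectation with a kernel-weighted sample average, which amounts to a weighted least squares fit at every evaluation point. The NumPy sketch below does this for a scalar smoothing variable with a Gaussian kernel and a fixed bandwidth. These choices, the helper name `smooth_coef` and the simulated data are assumptions made for illustration, not part of the cited paper.

```python
import numpy as np

def smooth_coef(y, x, z, z_eval, h):
    """Local-constant estimate of gamma(z) = (alpha(z), beta(z)')' at each z_eval."""
    n = len(y)
    w_mat = np.column_stack([np.ones(n), x])      # rows are W_i' = (1, X_i')
    gammas = np.empty((len(z_eval), w_mat.shape[1]))
    for m, z0 in enumerate(z_eval):
        k = np.exp(-0.5 * ((z - z0) / h) ** 2)    # kernel weights around z0
        wk = w_mat * k[:, None]
        a = wk.T @ w_mat                          # sum_i K_i W_i W_i'
        b = wk.T @ y                              # sum_i K_i W_i Y_i
        gammas[m] = np.linalg.solve(a, b)
    return gammas

# Simulated example: intercept sin(2*pi*z), slope 1 + z**2 (illustrative).
rng = np.random.default_rng(3)
n = 500
z = rng.uniform(0.0, 1.0, n)
x = rng.normal(size=n)
y = np.sin(2 * np.pi * z) + x * (1.0 + z ** 2) + 0.2 * rng.normal(size=n)

z_eval = np.array([0.25, 0.5, 0.75])
gamma_hat = smooth_coef(y, x, z, z_eval, h=0.08)
print(gamma_hat)   # column 0 estimates alpha(z), column 1 estimates beta(z)
```

Each row of `gamma_hat` holds the estimated intercept and slope at one evaluation point, so plotting the columns against `z_eval` on a finer grid would trace out the estimated coefficient functions.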

See also

- Nonparametric regression

- Effective degree of freedom

Notes

- [1] See Li and Racine (2007) for an in-depth look at nonparametric regression methods.

References

- Robinson, P.M. (1988). "Root-n Consistent Semiparametric Regression". Econometrica. The Econometric Society. 56 (4): 931–954. doi:10.2307/1912705. JSTOR 1912705.

- Li, Qi; Racine, Jeffrey S. (2007). Nonparametric Econometrics: Theory and Practice. Princeton University Press. ISBN 0-691-12161-3.

- Racine, J.S.; Liu, L. (2007). "A Partially Linear Kernel Estimator for Categorical Data". Unpublished manuscript, McMaster University.

- Ichimura, H. (1993). "Semiparametric Least Squares (SLS) and Weighted SLS Estimation of Single Index Models". Journal of Econometrics. 58: 71–120. doi:10.1016/0304-4076(93)90114-K.

- Klein, R. W.; Spady, R. H. (1993). "An Efficient Semiparametric Estimator for Binary Response Models". Econometrica. The Econometric Society. 61 (2): 387–421. doi:10.2307/2951556. JSTOR 2951556.

- Hastie, T.; Tibshirani, R. (1993). "Varying-Coefficient Models". Journal of the Royal Statistical Society, Series B. 55: 757–796.