Lucas–Kanade method

In computer vision, the Lucas–Kanade method (pronounced /lukɑs kɑnɑdɪ/) is a widely used differential method for optical flow estimation developed by Bruce D. Lucas and Takeo Kanade. It assumes that the flow is essentially constant in a local neighbourhood of the pixel under consideration, and solves the basic optical flow equations for all the pixels in that neighbourhood, by the least squares criterion.[1][2]

By combining information from several nearby pixels, the Lucas–Kanade method can often resolve the inherent ambiguity of the optical flow equation. It is also less sensitive to image noise than point-wise methods. On the other hand, since it is a purely local method, it cannot provide flow information in the interior of uniform regions of the image.

Concept

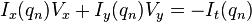

The Lucas–Kanade method assumes that the displacement of the image contents between two nearby instants (frames) is small and approximately constant within a neighborhood of the point p under consideration. Thus the optical flow equation can be assumed to hold for all pixels within a window centered at p. Namely, the local image flow (velocity) vector  must satisfy

must satisfy

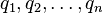

where  are the pixels inside the window, and

are the pixels inside the window, and  are the partial derivatives of the image

are the partial derivatives of the image  with respect to position x, y and time t, evaluated at the point

with respect to position x, y and time t, evaluated at the point  and at the current time.

and at the current time.

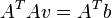

These equations can be written in matrix form  , where

, where

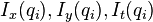

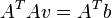

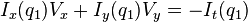
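As a minimal sketch of the over-determined system $A v = b$, the Python function below (assuming NumPy; the name `lucas_kanade_lstsq` and its parameters are hypothetical) stacks one row $[I_x(q_i), I_y(q_i)]$ and one entry $-I_t(q_i)$ per window pixel and hands the system to NumPy's least-squares solver. Approximating $I_t$ by the frame difference and $I_x$, $I_y$ by central differences averaged over both frames are choices of this sketch, not part of the method's definition.

```python
import numpy as np


def lucas_kanade_lstsq(frame0, frame1, center, half_window=7):
    """Estimate the flow (V_x, V_y) at `center` = (row, col) from two frames.

    Builds A (one row [I_x(q_i), I_y(q_i)] per window pixel) and
    b (entries -I_t(q_i)) and solves A v = b in the least-squares sense.
    """
    I0 = np.asarray(frame0, dtype=float)
    I1 = np.asarray(frame1, dtype=float)
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    gy0, gx0 = np.gradient(I0)
    gy1, gx1 = np.gradient(I1)
    Ix = 0.5 * (gx0 + gx1)  # spatial derivatives averaged over both frames
    Iy = 0.5 * (gy0 + gy1)
    It = I1 - I0            # temporal derivative as a frame difference

    r, c = center
    win = (slice(r - half_window, r + half_window + 1),
           slice(c - half_window, c + half_window + 1))
    A = np.column_stack([Ix[win].ravel(), Iy[win].ravel()])
    b = -It[win].ravel()
    v, *_ = np.linalg.lstsq(A, b, rcond=None)
    return v  # array([V_x, V_y])


# Usage: a smooth pattern translated by (0.25, -0.15) pixels.
Y, X = np.mgrid[0:64, 0:64].astype(float)
f0 = np.sin(0.3 * X) + np.cos(0.2 * Y)
f1 = np.sin(0.3 * (X - 0.25)) + np.cos(0.2 * (Y + 0.15))
print(lucas_kanade_lstsq(f0, f1, center=(32, 32)))  # close to [0.25, -0.15]
```

The small remaining error comes from the finite-difference derivatives, not from the least-squares step.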

When the window contains more than two pixels (n > 2), this system has more equations than unknowns and is therefore usually over-determined. The Lucas–Kanade method obtains a compromise solution by the least squares principle. Namely, it solves the 2×2 system

$$A^T A v = A^T b$$

or

$$v = (A^T A)^{-1} A^T b$$

where $A^T$ is the transpose of matrix $A$. That is, it computes

$$
\begin{bmatrix} V_x \\ V_y \end{bmatrix} =
\begin{bmatrix} \sum_i I_x(q_i)^2 & \sum_i I_x(q_i) I_y(q_i) \\ \sum_i I_y(q_i) I_x(q_i) & \sum_i I_y(q_i)^2 \end{bmatrix}^{-1}
\begin{bmatrix} -\sum_i I_x(q_i) I_t(q_i) \\ -\sum_i I_y(q_i) I_t(q_i) \end{bmatrix}
$$

where the central matrix in the equation is inverted, and the sums run from i = 1 to n.

The matrix $A^T A$ is often called the structure tensor of the image at the point p.
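The explicit 2×2 solution can be computed directly from the sums, without ever forming $A$. The sketch below is illustrative only (NumPy assumed; the name `lucas_kanade_closed_form` and the relative singularity threshold `rel_eps` are hypothetical): it takes the derivatives already sampled at the n window pixels, builds the entries of the structure tensor $A^T A$ and of $A^T b$, and applies the explicit inverse of a symmetric 2×2 matrix.

```python
import numpy as np


def lucas_kanade_closed_form(Ix, Iy, It, rel_eps=1e-12):
    """Solve for (V_x, V_y) from derivatives sampled at the n window pixels."""
    Ix, Iy, It = (np.asarray(a, dtype=float).ravel() for a in (Ix, Iy, It))
    # Entries of the structure tensor A^T A (sums over i = 1..n).
    sxx = np.sum(Ix * Ix)
    sxy = np.sum(Ix * Iy)
    syy = np.sum(Iy * Iy)
    # Entries of A^T b, where b_i = -I_t(q_i).
    bx = -np.sum(Ix * It)
    by = -np.sum(Iy * It)
    det = sxx * syy - sxy * sxy
    # Treat a determinant that is tiny relative to the squared trace as singular.
    if det <= rel_eps * (sxx + syy) ** 2:
        raise np.linalg.LinAlgError("structure tensor is singular at this point")
    # Inverse of [[sxx, sxy], [sxy, syy]] is [[syy, -sxy], [-sxy, sxx]] / det.
    vx = (syy * bx - sxy * by) / det
    vy = (-sxy * bx + sxx * by) / det
    return float(vx), float(vy)


# Usage: derivatives that satisfy I_x V_x + I_y V_y = -I_t exactly for v = (0.3, -0.7).
rng = np.random.default_rng(0)
Ix = rng.normal(size=25)
Iy = rng.normal(size=25)
It = -(0.3 * Ix - 0.7 * Iy)
print(lucas_kanade_closed_form(Ix, Iy, It))  # recovers (0.3, -0.7) up to rounding
```

A window in which every $I_y(q_i)$ is zero makes the determinant vanish, so the function raises instead of returning a meaningless flow.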

Weighted window

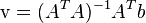

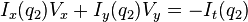

The plain least squares solution above gives the same importance to all n pixels $q_i$ in the window. In practice it is usually better to give more weight to the pixels that are closer to the central pixel p. For that, one uses the weighted version of the least squares equation,

$$A^T W A v = A^T W b$$

or

$$v = (A^T W A)^{-1} A^T W b$$

where $W$ is an n×n diagonal matrix containing the weights $W_{ii} = w_i$ to be assigned to the equation of pixel $q_i$. That is, it computes

$$
\begin{bmatrix} V_x \\ V_y \end{bmatrix} =
\begin{bmatrix} \sum_i w_i I_x(q_i)^2 & \sum_i w_i I_x(q_i) I_y(q_i) \\ \sum_i w_i I_x(q_i) I_y(q_i) & \sum_i w_i I_y(q_i)^2 \end{bmatrix}^{-1}
\begin{bmatrix} -\sum_i w_i I_x(q_i) I_t(q_i) \\ -\sum_i w_i I_y(q_i) I_t(q_i) \end{bmatrix}
$$

The weight $w_i$ is usually set to a Gaussian function of the distance between $q_i$ and p.
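A minimal sketch of the weighted variant, assuming NumPy. The helper names `gaussian_weights` and `weighted_lucas_kanade` are hypothetical, and the Gaussian width `sigma` is a free parameter that the method itself does not fix. The diagonal matrix $W$ is never built: multiplying each term of the sums by $w_i$ has the same effect.

```python
import numpy as np


def gaussian_weights(half_window, sigma):
    """Weights w_i = exp(-d_i^2 / (2 sigma^2)), d_i = distance from q_i to p."""
    offsets = np.arange(-half_window, half_window + 1, dtype=float)
    dy, dx = np.meshgrid(offsets, offsets, indexing="ij")
    return np.exp(-(dx ** 2 + dy ** 2) / (2.0 * sigma ** 2))


def weighted_lucas_kanade(Ix, Iy, It, w):
    """Solve A^T W A v = A^T W b with W = diag(w); all inputs cover one window."""
    Ix, Iy, It, w = (np.asarray(a, dtype=float).ravel() for a in (Ix, Iy, It, w))
    AtWA = np.array([[np.sum(w * Ix * Ix), np.sum(w * Ix * Iy)],
                     [np.sum(w * Ix * Iy), np.sum(w * Iy * Iy)]])
    AtWb = np.array([-np.sum(w * Ix * It), -np.sum(w * Iy * It)])
    return np.linalg.solve(AtWA, AtWb)


# Usage on a 7x7 window whose derivatives are consistent with v = (0.4, 0.1).
rng = np.random.default_rng(1)
Ix = rng.normal(size=(7, 7))
Iy = rng.normal(size=(7, 7))
It = -(0.4 * Ix + 0.1 * Iy)
w = gaussian_weights(half_window=3, sigma=1.5)
print(weighted_lucas_kanade(Ix, Iy, It, w))  # approximately [0.4, 0.1]
```

With exact, noise-free data every choice of weights gives the same answer; the weights only matter when the equations in the window disagree.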

Use conditions and techniques

For the equation $A^T A v = A^T b$ to be solvable, $A^T A$ must be invertible; equivalently, its eigenvalues must satisfy $\lambda_1 \ge \lambda_2 > 0$. To reduce sensitivity to noise, $\lambda_2$ is usually required not to be too small. Also, if $\lambda_1 / \lambda_2$ is too large, the point p lies on an edge, and the method suffers from the aperture problem. So for the method to work properly, $\lambda_1$ and $\lambda_2$ should both be large enough and of similar magnitude. This is the same condition used for corner detection, which means one can tell which pixels are suitable for the Lucas–Kanade method by inspecting a single image.
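The eigenvalue condition can be checked per window before any flow is computed. This is a sketch assuming NumPy; `min_eig` and `max_ratio` are hypothetical tuning thresholds, since the condition only asks for eigenvalues that are "large enough" and of "similar magnitude". Requiring a large smaller eigenvalue is also the idea behind the Shi–Tomasi corner criterion.

```python
import numpy as np


def structure_tensor_eigenvalues(Ix, Iy):
    """Return (lambda_1, lambda_2), lambda_1 >= lambda_2, of A^T A for one window."""
    Ix = np.asarray(Ix, dtype=float).ravel()
    Iy = np.asarray(Iy, dtype=float).ravel()
    M = np.array([[np.sum(Ix * Ix), np.sum(Ix * Iy)],
                  [np.sum(Ix * Iy), np.sum(Iy * Iy)]])
    lam_small, lam_large = np.linalg.eigvalsh(M)  # ascending order for symmetric M
    return lam_large, lam_small


def is_suitable_for_lk(Ix, Iy, min_eig=1.0, max_ratio=10.0):
    """True if both eigenvalues are large enough and of similar magnitude."""
    lam1, lam2 = structure_tensor_eigenvalues(Ix, Iy)
    if lam2 < min_eig:  # flat region, edge, or noise-dominated window
        return False
    return bool(lam1 / lam2 <= max_ratio)  # a large ratio indicates an edge


# Usage: gradients in two directions (corner-like) versus one direction (edge).
corner_Ix, corner_Iy = [1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]
edge_Ix, edge_Iy = [1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]
print(is_suitable_for_lk(corner_Ix, corner_Iy))  # True
print(is_suitable_for_lk(edge_Ix, edge_Iy))      # False
```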

One main assumption of this method is that the motion is small (for example, less than 1 pixel between the two images). If the motion is large and violates this assumption, one technique is to first reduce the resolution of the images and then apply the Lucas–Kanade method.[3]
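The reduced-resolution idea can be sketched as follows, assuming NumPy. Halving the resolution by averaging 2×2 blocks halves every displacement, so a flow estimated after `levels` halvings is scaled back up by $2^{\text{levels}}$. The helper names, the block-averaging filter (Gaussian smoothing before subsampling is also common) and the derivative approximations are assumptions of this sketch; a full pyramidal implementation such as the one in [3] also refines the estimate at each finer level.

```python
import numpy as np


def downsample2(img):
    """Halve the resolution by averaging 2x2 blocks (odd last row/column dropped)."""
    h, w = img.shape
    img = img[: h - h % 2, : w - w % 2]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2]
                   + img[0::2, 1::2] + img[1::2, 1::2])


def lk_window(I0, I1, center, half_window):
    """Plain Lucas-Kanade least-squares estimate on one window."""
    gy0, gx0 = np.gradient(I0)
    gy1, gx1 = np.gradient(I1)
    Ix, Iy, It = 0.5 * (gx0 + gx1), 0.5 * (gy0 + gy1), I1 - I0
    r, c = center
    win = (slice(r - half_window, r + half_window + 1),
           slice(c - half_window, c + half_window + 1))
    A = np.column_stack([Ix[win].ravel(), Iy[win].ravel()])
    v, *_ = np.linalg.lstsq(A, -It[win].ravel(), rcond=None)
    return v


def lk_reduced_resolution(frame0, frame1, center, levels=2, half_window=7):
    """Estimate flow at 1/2**levels resolution, rescaled to full-resolution pixels."""
    I0 = np.asarray(frame0, dtype=float)
    I1 = np.asarray(frame1, dtype=float)
    for _ in range(levels):
        I0, I1 = downsample2(I0), downsample2(I1)
    scale = 2 ** levels
    r, c = center
    return scale * lk_window(I0, I1, (r // scale, c // scale), half_window)


# Usage: a (3, -2) pixel shift, estimated at 1/4 resolution where it is (0.75, -0.5).
Y, X = np.mgrid[0:128, 0:128].astype(float)
f0 = np.sin(0.1 * X) + np.cos(0.08 * Y)
f1 = np.sin(0.1 * (X - 3.0)) + np.cos(0.08 * (Y + 2.0))
print(lk_reduced_resolution(f0, f1, center=(64, 64)))  # roughly [3, -2]
```

Because the coarse estimate is multiplied by the scale factor, its error grows by the same factor, which is why practical trackers refine it again at full resolution.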

Improvements and extensions

The least-squares approach implicitly assumes that the errors in the image data have a Gaussian distribution with zero mean. If one expects the window to contain a certain percentage of "outliers" (grossly wrong data values that do not follow the "ordinary" Gaussian error distribution), one may use statistical analysis to detect them and reduce their weight accordingly.
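One common way to reduce the weight of outliers is iteratively reweighted least squares. The sketch below is an illustration, not a procedure prescribed here: it assumes NumPy, uses Huber weights with the error scale re-estimated from the median absolute deviation (MAD) of the residuals, and the name `robust_lucas_kanade`, the tuning constant 1.345 and the iteration count are all assumptions.

```python
import numpy as np


def robust_lucas_kanade(Ix, Iy, It, iterations=20, c=1.345):
    """Huber-weighted IRLS for A v = b with rows [I_x, I_y] and b = -I_t."""
    A = np.column_stack([np.ravel(Ix), np.ravel(Iy)]).astype(float)
    b = -np.ravel(It).astype(float)
    w = np.ones(len(b))
    v, *_ = np.linalg.lstsq(A, b, rcond=None)  # start from plain least squares
    for _ in range(iterations):
        r = b - A @ v
        # Robust scale: 1.4826 * MAD estimates the std. dev. of Gaussian errors.
        sigma = max(1.4826 * np.median(np.abs(r - np.median(r))), 1e-12)
        # Huber weights: 1 for small residuals, c*sigma/|r| for large ones.
        w = np.minimum(1.0, c * sigma / np.maximum(np.abs(r), 1e-300))
        # Weighted normal equations A^T W A v = A^T W b.
        AtW = A.T * w
        v = np.linalg.solve(AtW @ A, AtW @ b)
    return v, w


# Usage: 49 consistent equations for v = (0.5, -0.25), five of them corrupted.
rng = np.random.default_rng(2)
Ix = rng.normal(size=49)
Iy = rng.normal(size=49)
It = -(0.5 * Ix - 0.25 * Iy)
It[:5] += 20.0  # five grossly wrong temporal derivatives
v_robust, weights = robust_lucas_kanade(Ix, Iy, It)
print(v_robust)     # close to [0.5, -0.25]
print(weights[:5])  # the corrupted equations end up with tiny weights
```

Huber weights never reach exactly zero, so gross outliers keep a small influence; redescending weight functions (for example Tukey's biweight) are an alternative choice.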

The Lucas–Kanade method per se can be used only when the image flow vector $(V_x, V_y)$ between the two frames is small enough for the differential equation of the optical flow to hold, which typically means less than the pixel spacing. When the flow vector may exceed this limit, such as in stereo matching or warped document registration, the Lucas–Kanade method may still be used to refine a coarse estimate of the flow obtained by other means; for example, by extrapolating the flow vectors computed for previous frames, or by running the Lucas–Kanade algorithm on reduced-scale versions of the images. Indeed, the latter approach is the basis of the popular Kanade–Lucas–Tomasi (KLT) feature matching algorithm.
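Refining a coarse estimate can be sketched as an iteration: warp the second frame back by the current estimate, run Lucas–Kanade on the residual motion, and add the correction. This sketch assumes NumPy, a single translation for the whole window, and bilinear interpolation for the warp; the names `warp_bilinear` and `refine_flow` and the stopping tolerance are hypothetical.

```python
import numpy as np


def warp_bilinear(img, dx, dy):
    """Sample img at (x + dx, y + dy) for every pixel, clamping at the borders."""
    height, width = img.shape
    Y, X = np.mgrid[0:height, 0:width].astype(float)
    xs = np.clip(X + dx, 0.0, width - 1.0)
    ys = np.clip(Y + dy, 0.0, height - 1.0)
    # Top-left neighbour, kept at least one pixel away from the last row/column.
    x0 = np.minimum(np.floor(xs).astype(int), width - 2)
    y0 = np.minimum(np.floor(ys).astype(int), height - 2)
    fx, fy = xs - x0, ys - y0
    return ((1 - fy) * (1 - fx) * img[y0, x0] + (1 - fy) * fx * img[y0, x0 + 1]
            + fy * (1 - fx) * img[y0 + 1, x0] + fy * fx * img[y0 + 1, x0 + 1])


def refine_flow(frame0, frame1, center, initial, half_window=10,
                iterations=10, tol=1e-4):
    """Refine an initial flow guess (V_x, V_y) at `center` = (row, col)."""
    I0 = np.asarray(frame0, dtype=float)
    I1 = np.asarray(frame1, dtype=float)
    r, c = center
    win = (slice(r - half_window, r + half_window + 1),
           slice(c - half_window, c + half_window + 1))
    v = np.array(initial, dtype=float)
    gy0, gx0 = np.gradient(I0)
    for _ in range(iterations):
        # After warping frame1 by v, only the residual motion (true flow - v) remains.
        W = warp_bilinear(I1, v[0], v[1])
        gyw, gxw = np.gradient(W)
        Ix, Iy, It = 0.5 * (gx0 + gxw), 0.5 * (gy0 + gyw), W - I0
        A = np.column_stack([Ix[win].ravel(), Iy[win].ravel()])
        delta, *_ = np.linalg.lstsq(A, -It[win].ravel(), rcond=None)
        v += delta
        if np.hypot(*delta) < tol:
            break
    return v


# Usage: true shift (2.3, -1.2), coarse guess (2.0, -1.0), e.g. from a previous frame.
Y, X = np.mgrid[0:96, 0:96].astype(float)
f0 = np.sin(0.2 * X) + np.cos(0.15 * Y)
f1 = np.sin(0.2 * (X - 2.3)) + np.cos(0.15 * (Y + 1.2))
print(refine_flow(f0, f1, center=(48, 48), initial=(2.0, -1.0)))  # near [2.3, -1.2]
```

Each step only has to measure the small residual, so the small-motion assumption holds even though the total displacement is several pixels.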

A similar technique can be used to compute differential affine deformations of the image contents.
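One way to set this up (an assumption of this sketch, not a formulation given in the text) is to let the flow vary affinely across the window, $V_x = a_1 + a_2 x + a_3 y$ and $V_y = a_4 + a_5 x + a_6 y$, with (x, y) measured from the window centre. Substituting into the optical flow equation keeps the system linear, now with six unknowns instead of two. A minimal NumPy sketch with the hypothetical name `affine_lucas_kanade`:

```python
import numpy as np


def affine_lucas_kanade(Ix, Iy, It):
    """Least-squares affine flow parameters (a1..a6) for one square window.

    Ix, Iy, It are (2h+1) x (2h+1) arrays of derivatives over the window;
    coordinates (x, y) are measured from the window centre.
    """
    Ix, Iy, It = (np.asarray(a, dtype=float) for a in (Ix, Iy, It))
    h = Ix.shape[0] // 2
    offsets = np.arange(-h, h + 1, dtype=float)
    y, x = np.meshgrid(offsets, offsets, indexing="ij")
    # Each pixel gives I_x (a1 + a2 x + a3 y) + I_y (a4 + a5 x + a6 y) = -I_t.
    A = np.column_stack([col.ravel() for col in
                         (Ix, Ix * x, Ix * y, Iy, Iy * x, Iy * y)])
    params, *_ = np.linalg.lstsq(A, -It.ravel(), rcond=None)
    return params


# Usage: derivatives consistent with a small rotation plus a translation.
rng = np.random.default_rng(3)
Ix = rng.normal(size=(9, 9))
Iy = rng.normal(size=(9, 9))
true_params = np.array([0.5, 0.0, -0.02, -0.3, 0.02, 0.0])
y, x = np.meshgrid(np.arange(-4.0, 5.0), np.arange(-4.0, 5.0), indexing="ij")
Vx = true_params[0] + true_params[1] * x + true_params[2] * y
Vy = true_params[3] + true_params[4] * x + true_params[5] * y
It = -(Ix * Vx + Iy * Vy)
print(affine_lucas_kanade(Ix, Iy, It))  # recovers true_params up to rounding
```

Six unknowns need a window with enough gradient variation in both directions; otherwise the 6×6 normal matrix becomes ill-conditioned, just as $A^T A$ does in the translational case.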

See also

- Optical flow

- Horn–Schunck method

- The Shi and Tomasi corner detection algorithm

- Kanade–Lucas–Tomasi feature tracker

References

- B. D. Lucas and T. Kanade (1981). An iterative image registration technique with an application to stereo vision. Proceedings of Imaging Understanding Workshop, pages 121–130.

- Bruce D. Lucas (1984). Generalized Image Matching by the Method of Differences. Doctoral dissertation.

- J. Y. Bouguet (2001). Pyramidal implementation of the affine Lucas Kanade feature tracker: description of the algorithm. Intel Corporation, 5.

External links

- The image stabilizer plugin for ImageJ based on the Lucas–Kanade method

- Mathworks Lucas-Kanade Matlab implementation of inverse and normal affine Lucas-Kanade

- FolkiGPU : GPU implementation of an iterative Lucas-Kanade based optical flow

- KLT: An Implementation of the Kanade–Lucas–Tomasi Feature Tracker

- Takeo Kanade

- C++ example using the Lucas-Kanade optical flow algorithm

- Python example using the Lucas-Kanade optical flow algorithm

- Python example using the Lucas-Kanade tracker for homography matching

- MATLAB quick example of Lucas-Kanade method to show optical flow field

- MATLAB quick example of Lucas-Kanade method to show velocity vector of objects
