Laws of robotics


Laws of robotics are a set of laws, rules, or principles intended as a fundamental framework to underpin the behavior of robots designed to have a degree of autonomy. Robots of this degree of complexity do not yet exist, but they have been widely anticipated in science fiction and film, and are a topic of active research and development in the fields of robotics and artificial intelligence.

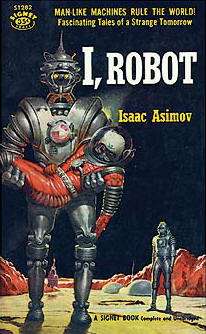

The best-known laws are those written by Isaac Asimov in the 1940s, or based upon them, but other sets of laws have been proposed by researchers in the decades since.

Isaac Asimov's "Three Laws of Robotics"

The best-known set of laws is Isaac Asimov's "Three Laws of Robotics". These were introduced in his 1942 short story "Runaround", although they were foreshadowed in a few earlier stories. The Three Laws are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.
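The precedence among the three laws (each yields to the ones before it) can be read as a lexicographic ordering over violations. The following is a minimal Python sketch of that reading, for illustration only: `Outcome`, `violation_key`, and `choose` are hypothetical names, and reducing each law to a single boolean is a strong simplifying assumption that the stories themselves do not specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical predicted consequences of one candidate action."""
    name: str
    harms_human: bool     # First Law: injury, or harm allowed through inaction
    disobeys_order: bool  # Second Law: a human order is not carried out
    endangers_self: bool  # Third Law: the robot's own existence is put at risk


def violation_key(outcome):
    # Tuples compare element by element, and False sorts before True,
    # so a First Law violation outweighs any Second or Third Law violation.
    return (outcome.harms_human, outcome.disobeys_order, outcome.endangers_self)


def choose(outcomes):
    # Pick the candidate whose violations are least severe in law order.
    return min(outcomes, key=violation_key)


# An order that endangers only the robot is obeyed (Second Law over Third).
risky_order = [
    Outcome("obey order, enter hazard", False, False, True),
    Outcome("refuse order, stay safe", False, True, False),
]

# An order to injure a human is refused (First Law over Second).
harmful_order = [
    Outcome("obey order, injure human", True, False, False),
    Outcome("refuse order", False, True, False),
]

print(choose(risky_order).name)
print(choose(harmful_order).name)
```

Under these assumptions, the first call selects "obey order, enter hazard" and the second selects "refuse order". Real situations rarely reduce to clean true/false judgments, which is one reason the laws work better as a narrative device than as a specification.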

Near the end of his book Foundation and Earth, Asimov introduced a zeroth law:

- 0. A robot may not injure humanity, or, by inaction, allow humanity to come to harm.

Adaptations and extensions based upon this framework exist. As of 2011, they remain a "fictional device".[1]

EPSRC / AHRC principles of robotics

In 2011, the Engineering and Physical Sciences Research Council (EPSRC) and the Arts and Humanities Research Council (AHRC) of Great Britain jointly published a set of five ethical "principles for designers, builders and users of robots" in the real world, along with seven "high-level messages" intended to be conveyed, based on a September 2010 research workshop:[2][3][1]

- Robots should not be designed solely or primarily to kill or harm humans.

- Humans, not robots, are responsible agents. Robots are tools designed to achieve human goals.

- Robots should be designed in ways that assure their safety and security.

- Robots are artifacts; they should not be designed to exploit vulnerable users by evoking an emotional response or dependency. It should always be possible to tell a robot from a human.

- It should always be possible to find out who is legally responsible for a robot.

The messages intended to be conveyed were:

- We believe robots have the potential to provide immense positive impact to society. We want to encourage responsible robot research.

- Bad practice hurts us all.

- Addressing obvious public concerns will help us all make progress.

- It is important to demonstrate that we, as roboticists, are committed to the best possible standards of practice.

- To understand the context and consequences of our research, we should work with experts from other disciplines, including: social sciences, law, philosophy and the arts.

- We should consider the ethics of transparency: are there limits to what should be openly available?

- When we see erroneous accounts in the press, we commit to take the time to contact the reporting journalists.

Judicial development

A comprehensive terminological codification for the legal assessment of technological developments in the robotics industry has already begun, mainly in Asian countries.[4] This progress represents a contemporary reinterpretation of law (and ethics) in the field of robotics, one that assumes a rethinking of traditional legal constellations. These include primarily legal liability issues in civil and criminal law.

Satya Nadella's laws

In June 2016, Satya Nadella, the CEO of Microsoft Corporation at the time, gave an interview to Slate magazine in which he roughly sketched six rules for artificial intelligences to be observed by their designers:[5][6]

- "A.I. must be designed to assist humanity", meaning that human autonomy needs to be respected.

- "A.I. must be transparent", meaning that humans should know and be able to understand how it works.

- "A.I. must maximize efficiencies without destroying the dignity of people".

- "A.I. must be designed for intelligent privacy", meaning that it earns trust by guarding people's information.

- "A.I. must have algorithmic accountability so that humans can undo unintended harm".

- "A.I. must guard against bias", so that it does not discriminate against people.

Tilden's "Laws of Robotics"

Mark W. Tilden proposed three guiding principles/rules for robots, which do not pertain to humans or humanity, but to robots themselves:

- A robot must protect its existence at all costs.

- A robot must obtain and maintain access to its own power source.

- A robot must continually search for better power sources.

References

1. Stewart, Jon (2011-10-03). "Ready for the robot revolution?". BBC News. Retrieved 2011-10-03.

2. "Principles of robotics: Regulating Robots in the Real World". Engineering and Physical Sciences Research Council. Retrieved 2011-10-03.

3. Winfield, Alan. "Five roboethical principles – for humans". New Scientist. Retrieved 2011-10-03.

4. bcc.co.uk: "Robot age poses ethical dilemma".

5. Nadella, Satya (2016-06-28). "The Partnership of the Future". Slate. ISSN 1091-2339. Retrieved 2016-06-30.

6. Vincent, James (2016-06-29). "Satya Nadella's rules for AI are more boring (and relevant) than Asimov's Three Laws". The Verge. Vox Media. Retrieved 2016-06-30.