IOPS

Input/output operations per second (IOPS, pronounced eye-ops) is a performance measurement used to characterize computer storage devices such as hard disk drives (HDD), solid state drives (SSD), and storage area networks (SAN). Although frequently mischaracterized as a 'benchmark', the IOPS numbers published by storage device manufacturers do not directly relate to real-world application performance.[1][2]

Background

To meaningfully describe the performance characteristics of any storage device, it is necessary to specify at least three metrics simultaneously: IOPS, response time, and (application) workload. Without simultaneous specification of response time and workload, IOPS figures are essentially meaningless. In isolation, IOPS can be considered analogous to the "revolutions per minute" of an automobile engine: knowing that an engine can spin at 10,000 RPM with its transmission in neutral conveys nothing of value, whereas knowing the torque and horsepower it develops at a given RPM fully describes the capabilities of the engine.

In 1999, recognizing the confusion created by industry abuse of IOPS numbers following the release of Iometer, the Storage Performance Council developed an industry-standard, peer-reviewed and audited benchmark, the SPC-1 benchmark suite, which has been widely recognized as the only meaningful measurement of storage device I/O performance. The SPC-1 requires storage vendors to fully characterize their products against a standardized workload closely modeled on 'real-world' applications, reporting both IOPS and response times, with explicit prohibitions and safeguards against 'cheating' and 'benchmark specials'. As such, an SPC-1 benchmark result provides users with complete information about IOPS, response times, sustainability of performance over time, and data integrity checks. Moreover, SPC-1 audit rules require vendors to submit a complete bill of materials, including pricing of all components used in the benchmark, to facilitate SPC-1 "Cost-per-IOPS" comparisons among vendor submissions.

Among the single-dimension IOPS tools created explicitly by and for "benchmarketers", applications such as Iometer (originally developed by Intel), IOzone, and FIO[3] have frequently been used to grossly exaggerate IOPS. A notable example is Sun (now Oracle) promoting its F5100 Flash array as capable of delivering "1 million IOPS in 1 RU" (rack unit). When subsequently tested on the SPC-1, the same storage device delivered only 30% of the Iometer value.

http://storagemojo.com/2009/10/12/1-million-iops-in-1-ru/ http://www.storageperformance.org/benchmark_results_files/SPC-1C/Oracle/C00010_Sun_F5100/c00010_Oracle_Sun-F5100-Flash_SPC1C_executive-summary.pdf

The specific number of IOPS possible in any system configuration varies greatly depending upon the variables the tester enters into the program, including the balance of read and write operations, the mix of sequential and random access patterns, the number of worker threads and queue depth, and the data block sizes.[1] Other factors can also affect the IOPS results, including the system setup, storage drivers, and OS background operations. When testing SSDs in particular, preconditioning must also be taken into account.[4]
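One way to keep an IOPS figure tied to the conditions it was measured under is to record it together with the test variables and the observed response time. The following Python sketch is illustrative only: the class names `WorkloadSpec` and `IopsResult`, their fields, and the sample values are hypothetical and do not correspond to the format of any benchmark tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadSpec:
    """Hypothetical record of the variables a tester chooses for a run."""
    read_percent: int      # share of operations that are reads, 0-100
    random_percent: int    # share of operations that are random, 0-100
    block_size_bytes: int  # size of each transfer
    worker_threads: int    # number of concurrent workers
    queue_depth: int       # outstanding I/Os per worker

    def __post_init__(self):
        # Reject percentages outside the valid range early.
        for name in ("read_percent", "random_percent"):
            value = getattr(self, name)
            if not 0 <= value <= 100:
                raise ValueError(f"{name} must be between 0 and 100")


@dataclass(frozen=True)
class IopsResult:
    """An IOPS figure reported together with its response time and workload."""
    iops: float
    mean_response_time_ms: float
    workload: WorkloadSpec

    def summary(self) -> str:
        w = self.workload
        return (
            f"{self.iops:,.0f} IOPS at {self.mean_response_time_ms:.2f} ms "
            f"mean response time ({w.read_percent}% read, "
            f"{w.random_percent}% random, "
            f"{w.block_size_bytes // 1024} KiB blocks, "
            f"{w.worker_threads} worker thread(s), "
            f"queue depth {w.queue_depth})"
        )


# Hypothetical sample values, chosen only to show the shape of a report.
result = IopsResult(
    iops=4000,
    mean_response_time_ms=1.0,
    workload=WorkloadSpec(
        read_percent=70,
        random_percent=100,
        block_size_bytes=4096,
        worker_threads=1,
        queue_depth=4,
    ),
)
print(result.summary())
```

Reporting the three pieces together makes it harder to quote the IOPS number without the workload and response time that give it meaning.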

Performance characteristics

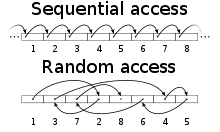

The most common performance characteristics measured are sequential and random operations. Sequential operations access locations on the storage device in a contiguous manner and are generally associated with large data transfer sizes, e.g., 128 KB. Random operations access locations on the storage device in a non-contiguous manner and are generally associated with small data transfer sizes, e.g., 4 KB.

The most common performance characteristics are as follows:

| Measurement | Description |
|---|---|
| Total IOPS | Total number of I/O operations per second (when performing a mix of read and write tests) |
| Random Read IOPS | Average number of random read I/O operations per second |
| Random Write IOPS | Average number of random write I/O operations per second |
| Sequential Read IOPS | Average number of sequential read I/O operations per second |
| Sequential Write IOPS | Average number of sequential write I/O operations per second |

For HDDs and similar electromechanical storage devices, the random IOPS numbers are primarily dependent upon the storage device's random seek time, whereas for SSDs and similar solid state storage devices, the random IOPS numbers are primarily dependent upon the storage device's internal controller and memory interface speeds. On both types of storage devices the sequential IOPS numbers (especially when using a large block size) typically indicate the maximum sustained bandwidth that the storage device can handle.[1] Often sequential IOPS are reported as a simple MB/s number as follows:

IOPS × TransferSizeInBytes = BytesPerSec

(with the answer typically converted to MegabytesPerSec)
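Converting sequential IOPS to a throughput figure is a single multiplication of the operation rate by the transfer size. A minimal Python sketch follows, assuming decimal megabytes (1 MB = 1,000,000 bytes); the function names and the sample numbers are illustrative.

```python
def iops_to_mb_per_s(iops, transfer_size_bytes, bytes_per_mb=1_000_000):
    """Convert an IOPS figure to throughput in MB/s.

    bytes_per_mb is an assumption: pass 1024 * 1024 for binary megabytes.
    """
    bytes_per_second = iops * transfer_size_bytes
    return bytes_per_second / bytes_per_mb


def mb_per_s_to_iops(mb_per_s, transfer_size_bytes, bytes_per_mb=1_000_000):
    """Inverse conversion: throughput in MB/s back to operations per second."""
    return mb_per_s * bytes_per_mb / transfer_size_bytes


# Illustrative numbers: 4,000 sequential operations per second at 128 KiB each.
throughput = iops_to_mb_per_s(4000, 128 * 1024)
print(f"{throughput:.3f} MB/s")
```

The same throughput corresponds to very different IOPS figures at different transfer sizes, which is why a sequential IOPS number is only meaningful when the block size is stated.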

Some HDDs improve in performance as the number of outstanding I/Os (i.e. queue depth) increases. This is usually the result of more advanced controller logic on the drive performing command queuing and reordering, commonly called either Tagged Command Queuing (TCQ) or Native Command Queuing (NCQ). Most commodity SATA drives either cannot do this, or their implementation is so poor that no performance benefit can be seen. Enterprise-class SATA drives, such as the Western Digital Raptor and Seagate Barracuda NL, improve by nearly 100% with deep queues.[5] High-end SCSI drives, more commonly found in servers, generally show much greater improvement, with the Seagate Savvio exceeding 400 IOPS, more than doubling its performance.
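Why reordering queued commands helps a mechanical drive can be illustrated with a toy model. The Python sketch below is a deliberate simplification and not how TCQ or NCQ firmware is implemented: it assumes head travel distance is the only cost, ignores rotational position, and uses a greedy nearest-request-first policy. The function name `total_head_travel` and the random workload are hypothetical.

```python
import random


def total_head_travel(requests, queue_depth):
    """Serve requests nearest-first from a bounded queue; return head travel.

    requests: target positions in arrival order (normalized to 0.0-1.0 here).
    queue_depth: how many outstanding requests the drive may choose among;
    a depth of 1 degenerates to plain first-in, first-out service.
    """
    pending = list(requests)
    queue = []
    head = 0.0
    travelled = 0.0
    next_index = 0
    while next_index < len(pending) or queue:
        # Refill the queue up to the allowed number of outstanding requests.
        while len(queue) < queue_depth and next_index < len(pending):
            queue.append(pending[next_index])
            next_index += 1
        # Greedy choice: serve the queued request closest to the head.
        target = min(queue, key=lambda pos: abs(pos - head))
        queue.remove(target)
        travelled += abs(target - head)
        head = target
    return travelled


# Fixed seed so repeated runs use the same hypothetical workload.
rng = random.Random(42)
workload = [rng.random() for _ in range(2000)]

fifo = total_head_travel(workload, queue_depth=1)
deep = total_head_travel(workload, queue_depth=32)
print(f"queue depth 1:  total head travel {fifo:.1f}")
print(f"queue depth 32: total head travel {deep:.1f}")
```

With a queue depth of 1 the model must serve requests in arrival order; with a deeper queue it can pick nearby requests first, so the total head travel, and therefore the time spent seeking, falls in this model.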

While traditional HDDs have about the same IOPS for read and write operations, most NAND flash-based SSDs are much slower at writing than at reading, because a previously written location cannot be rewritten directly, which forces a procedure called garbage collection.[6][7][8] This has led hardware test sites to provide independently measured results when testing IOPS performance.

Newer flash SSDs such as the Intel X25-E have much higher IOPS than traditional hard disk drives. In a test done by Xssist using Iometer (4 KB random transfers, 70/30 read/write ratio, queue depth 4), the IOPS delivered by the Intel X25-E 64 GB G1 started at around 10,000, dropped sharply after 8 minutes to 4,000, and continued to decrease gradually for the next 42 minutes. From around the 50th minute onward, IOPS varied between 3,000 and 4,000 for the rest of the 8+ hour test run.[9] Even with this drop in random IOPS, the X25-E still delivered much higher IOPS than traditional hard disk drives. Some SSDs, including the OCZ RevoDrive 3 x2 PCIe using the SandForce controller, have shown much higher sustained write performance that more closely matches the read speed.[10]

Examples

Mechanical hard drives

Some commonly accepted averages for random I/O operations, calculated as IOPS = 1/(seek time + latency):

| Device | Type | IOPS | Interface | Notes |
|---|---|---|---|---|
| 7,200 rpm SATA drives | HDD | ~75-100 IOPS[2] | SATA 3 Gbit/s | |
| 10,000 rpm SATA drives | HDD | ~125-150 IOPS[2] | SATA 3 Gbit/s | |
| 10,000 rpm SAS drives | HDD | ~140 IOPS[2] | SAS | |
| 15,000 rpm SAS drives | HDD | ~175-210 IOPS[2] | SAS | |
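The estimate 1/(seek + latency) used for the table above can be worked through numerically. The Python sketch below assumes that "latency" means average rotational latency, taken as the time for half a revolution. The average seek times it uses are illustrative assumptions, not figures from any data sheet.

```python
def rotational_latency_s(rpm):
    """Average rotational latency in seconds: the time for half a revolution."""
    seconds_per_revolution = 60.0 / rpm
    return 0.5 * seconds_per_revolution


def estimate_random_iops(avg_seek_ms, rpm):
    """Estimate random IOPS as 1 / (average seek time + rotational latency)."""
    seek_s = avg_seek_ms / 1000.0
    return 1.0 / (seek_s + rotational_latency_s(rpm))


# (spindle speed in rpm, assumed average seek time in milliseconds)
assumed_drives = [
    (7200, 8.5),
    (10000, 4.5),
    (15000, 3.5),
]

for rpm, seek_ms in assumed_drives:
    latency_ms = rotational_latency_s(rpm) * 1000.0
    iops = estimate_random_iops(seek_ms, rpm)
    print(f"{rpm:>6} rpm: seek {seek_ms} ms + latency {latency_ms:.2f} ms "
          f"gives about {iops:.0f} IOPS")
```

Under these assumed seek times the estimates fall inside the ranges listed in the table, which shows how strongly mechanical positioning time bounds the random IOPS of a hard drive.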

Solid-state devices

| Device | Type | IOPS | Interface | Notes |
|---|---|---|---|---|
| Intel X25-M G2 (MLC) | SSD | ~8,600 IOPS[11] | SATA 3 Gbit/s | Intel's data sheet[12] claims 6,600/8,600 IOPS (80 GB/160 GB version) and 35,000 IOPS for random 4 KB writes and reads, respectively. |
| Intel X25-E (SLC) | SSD | ~5,000 IOPS[13] | SATA 3 Gbit/s | Intel's data sheet[14] claims 3,300 IOPS and 35,000 IOPS for writes and reads, respectively. 5,000 IOPS are measured for a mix. Intel X25-E G1 has around 3 times higher IOPS compared to the Intel X25-M G2.[15] |
| G.Skill Phoenix Pro | SSD | ~20,000 IOPS[16] | SATA 3 Gbit/s | SandForce-1200 based SSD drives with enhanced firmware, states up to 50,000 IOPS, but benchmarking shows for this particular drive ~25,000 IOPS for random read and ~15,000 IOPS for random write.[16] |
| OCZ Vertex 3 | SSD | Up to 60,000 IOPS[17] | SATA 6 Gbit/s | Random Write 4 KB (Aligned) |
| Corsair Force Series GT | SSD | Up to 85,000 IOPS[18] | SATA 6 Gbit/s | 240 GB Drive, 555 MB/s sequential read & 525 MB/s sequential write, Random Write 4 KB Test (Aligned) |
| Samsung SSD 850 PRO | SSD | 100,000 read IOPS, 90,000 write IOPS[19] | SATA 6 Gbit/s | 4 KB aligned random I/O at QD32; 10,000 read IOPS, 36,000 write IOPS at QD1; 550 MB/s sequential read, 520 MB/s sequential write on 256 GB and larger models; 550 MB/s sequential read, 470 MB/s sequential write on 128 GB model[19] |
| OCZ Vertex 4 | SSD | Up to 120,000 IOPS[20] | SATA 6 Gbit/s | 256 GB Drive, 560 MB/s sequential read & 510 MB/s sequential write, Random Read 4 KB Test 90K IOPS, Random Write 4 KB Test 85K IOPS |
| (IBM) Texas Memory Systems RamSan-20 | SSD | 120,000+ Random Read/Write IOPS[21] | PCIe | Includes RAM cache |
| Fusion-io ioDrive | SSD | 140,000 Read IOPS, 135,000 Write IOPS[22] | PCIe | |
| Virident Systems tachIOn | SSD | 320,000 sustained READ IOPS using 4KB blocks and 200,000 sustained WRITE IOPS using 4KB blocks[23] | PCIe | |
| OCZ RevoDrive 3 X2 | SSD | 200,000 Random Write 4K IOPS[24] | PCIe | |
| Fusion-io ioDrive Duo | SSD | 250,000+ IOPS[25] | PCIe | |
| Violin Memory Violin 3200 | SSD | 250,000+ Random Read/Write IOPS[26] | PCIe/FC/InfiniBand/iSCSI | Flash Memory Array |
| WHIPTAIL, ACCELA | SSD | 250,000/200,000+ Write/Read IOPS[27] | Fibre Channel, iSCSI, InfiniBand/SRP, NFS, SMB | Flash Based Storage Array |
| DDRdrive X1 | SSD | 300,000+ (512B Random Read IOPS) and 200,000+ (512B Random Write IOPS)[28][29][30][31] | PCIe | |
| SolidFire SF3010/SF6010 | SSD | 250,000 4KB Read/Write IOPS[32] | iSCSI | Flash Based Storage Array (5RU) |
| Intel 750 1.2 TB | SSD | 440,000 4KB Read IOPS[33]; 290,000 4KB Write IOPS | PCIe 3.0 | One of the highest performing in its price class |
| (IBM) Texas Memory Systems RamSan-720 Appliance | Flash/DRAM | 500,000 Optimal Read, 250,000 Optimal Write 4KB IOPS[36] | FC / InfiniBand | |
| OCZ Single SuperScale Z-Drive R4 PCI-Express SSD | SSD | Up to 500,000 IOPS[37] | PCIe | |
| WHIPTAIL, INVICTA | SSD | 650,000/550,000+ Read/Write IOPS[38] | Fibre Channel, iSCSI, InfiniBand/SRP, NFS | Flash Based Storage Array |
| Violin Memory Violin 6000 | 3RU Flash Memory Array | 1,000,000+ Random Read/Write IOPS[39] | FC/InfiniBand/10Gb (iSCSI)/PCIe | |
| (IBM) Texas Memory Systems RamSan-630 Appliance | Flash/DRAM | 1,000,000+ 4KB Random Read/Write IOPS[40] | FC / InfiniBand | |
| IBM FlashSystem 840 | Flash/DRAM | 1,100,000+ 4KB Random Read/600,000 4KB Write IOPS[41] | 8G FC / 16G FC / 10G FCoE / InfiniBand | Modular 2U Storage Shelf - 4TB-48TB |
| Fusion-io ioDrive Octal (single PCI Express card) | SSD | 1,180,000+ Random Read/Write IOPS[42] | PCIe | |
| OCZ 2x SuperScale Z-Drive R4 PCI-Express SSD | SSD | Up to 1,200,000 IOPS[37] | PCIe | |
| (IBM) Texas Memory Systems RamSan-70 | Flash/DRAM | 1,200,000 Random Read/Write IOPS[43] | PCIe | Includes RAM cache |
| Kaminario K2 | SSD | Up to 2,000,000 IOPS.[44] 1,200,000 IOPS in SPC-1 benchmark simulating business applications[45][46] | FC | MLC Flash |
| NetApp FAS6240 cluster | Flash/Disk | 1,261,145 SPECsfs2008 nfsv3 IOPS using 1,440 15K disks, across 60 shelves, with virtual storage tiering.[47] | NFS, SMB, FC, FCoE, iSCSI | SPECsfs2008 is the latest version of the Standard Performance Evaluation Corporation benchmark suite measuring file server throughput and response time, providing a standardized method for comparing performance across different vendor platforms. http://www.spec.org/sfs2008. |
| Fusion-io ioDrive2 | SSD | Up to 9,608,000 IOPS[48] | PCIe | Only via demonstration so far. |
| E8 Storage | SSD | Up to 10 million IOPS[49] | 10-100Gb Ethernet | Rack scale flash appliance |
| EMC DSSD D5 | Flash | Up to 10 million IOPS[50] | PCIe Out of Box, up to 48 clients with high availability. | PCIe Rack Scale Flash Appliance |
| Pure Storage M50 | Flash | Up to 220,000 32K IOPS; <1 ms average latency; up to 7 GB/s bandwidth[51] | 16 Gb/s Fibre Channel, 10 Gb/s Ethernet iSCSI, 10 Gb/s Replication ports, 1 Gb/s Management ports | 3U – 7U; 1007 - 1447 Watts (nominal); 95 lbs (43.1 kg) fully loaded + 44 lbs per expansion shelf; 5.12” x 18.94” x 29.72” chassis |


References

- 1 2 3 Lowe, Scott (2010-02-12). "Calculate IOPS in a storage array". techrepublic.com. Retrieved 2011-07-03.

- 1 2 3 4 5 "Getting The Hang Of IOPS v1.3". 2012-08-03. Retrieved 2013-08-15.

- ↑ Axboe, Jens. "Flexible IO Tester". Retrieved 2010-06-04. (source available at http://git.kernel.dk/)

- ↑ Smith, Kent (2009-08-11). "Benchmarking SSDs: The Devil is in the Preconditioning Details" (PDF). SandForce.com. Retrieved 2015-05-05.

- ↑ "SATA in the Enterprise - A 500 GB Drive Roundup | StorageReview.com - Storage Reviews". StorageReview.com. 2006-07-13. Retrieved 2013-05-13.

- ↑ Hu, X.-Y. and E. Eleftheriou, R. Haas, I. Iliadis, R. Pletka (2009). "Write Amplification Analysis in Flash-Based Solid State Drives". IBM. CiteSeerX 10.1.1.154.8668.

- ↑ "SSDs - Write Amplification, TRIM and GC" (PDF). OCZ Technology. Retrieved 2010-05-31.

- ↑ "Intel Solid State Drives". Intel. Retrieved 2010-05-31.

- ↑ "Intel X25-E 64GB G1, 4KB Random IOPS, iometer benchmark". 2010-03-27. Retrieved 2010-04-01.

- ↑ "OCZ RevoDrive 3 x2 PCIe SSD Review – 1.5GB Read/1.25GB Write/200,000 IOPS As Little As $699". 2011-06-28. Retrieved 2011-06-30.

- ↑ Schmid, Patrick; Roos, Achim (2008-09-08). "Intel's X25-M Solid State Drive Reviewed". Retrieved 2011-08-02.

- ↑ http://download.intel.com/design/flash/nand/mainstream/322296.pdf

- ↑ "Intel's X25-E SSD Walks All Over The Competition : They Did It Again: X25-E For Servers Takes Off". Tomshardware.com. Retrieved 2013-05-13.

- ↑ http://download.intel.com/design/flash/nand/extreme/extreme-sata-ssd-datasheet.pdf

- ↑ "Intel X25-E G1 vs Intel X25-M G2 Random 4 KB IOPS, iometer". May 2010. Retrieved 2010-05-19.

- 1 2 "G.Skill Phoenix Pro 120 GB Test - SandForce SF-1200 SSD mit 50K IOPS - HD Tune Access Time IOPS (Diagramme) (5/12)". Tweakpc.de. Retrieved 2013-05-13.

- ↑ http://www.ocztechnology.com/res/manuals/OCZ_Vertex3_Product_Sheet.pdf

- ↑ "Force Series™ GT 240GB SATA 3 6Gb/s Solid-State Hard Drive - Force Series GT - SSD". Corsair.com. Retrieved 2013-05-13.

- 1 2 "Samsung SSD 850 PRO Specifications". Samsung Electronics. Retrieved 28 December 2014.

- ↑ "OCZ Vertex 4 SSD 2.5" SATA 3 6Gb/s". Ocztechnology.com. Retrieved 2013-05-13.

- ↑ "IBM System Storage - Flash: Overview". Ramsan.com. Retrieved 2013-05-13.

- ↑ "Home - Fusion-io Community Forum". Community.fusionio.com. Retrieved 2013-05-13.

- ↑ "Virident's tachIOn SSD flashes by". theregister.co.uk.

- ↑ "OCZ RevoDrive 3 X2 480GB Review | StorageReview.com - Storage Reviews". StorageReview.com. 2011-06-28. Retrieved 2013-05-13.

- ↑ "Home - Fusion-io Community Forum". Community.fusionio.com. Retrieved 2013-05-13.

- ↑ Archived January 30, 2011, at the Wayback Machine.

- ↑ "Products". Whiptail. Retrieved 2013-05-13.

- ↑ http://www.ddrdrive.com/ddrdrive_press.pdf

- ↑ http://www.ddrdrive.com/ddrdrive_brief.pdf

- ↑ http://www.ddrdrive.com/ddrdrive_bench.pdf

- ↑ Allyn Malventano (2009-05-04). "DDRdrive hits the ground running - PCI-E RAM-based SSD | PC Perspective". Pcper.com. Retrieved 2013-05-13.

- ↑ "SSD Cloud Storage System - Examples & Specifications". SolidFire. Retrieved 2013-05-13.

- ↑ "Intel® SSD 750 Series (1.2TB, 2.5in PCIe 3.0, 20nm, MLC) Specifications". Intel® ARK (Product Specs). Retrieved 2015-11-17.

- ↑ "Intel 750 Series AIC 1.2TB PCI-Express 3.0 x4 MLC Internal Solid State Drive (SSD) SSDPEDMW012T4X1 - Newegg.com". Retrieved 2015-11-17.

- ↑ "Product Review: Samsung SM951 M.2 Drive". Puget Systems. Retrieved 2015-11-17.

- ↑ https://www.ramsan.com/files/download/798

- 1 2 "OCZ Technology Launches Next Generation Z-Drive R4 PCI Express Solid State Storage Systems". OCZ. 2011-08-02. Retrieved 2011-08-02.

- ↑ "Products". Whiptail. Retrieved 2013-05-13.

- ↑ 6000 Series Flash Memory Arrays. "Flash Memory Arrays, Enterprise Flash Storage Violin Memory". Violin-memory.com. Retrieved 2013-11-14.

- ↑ "IBM flash storage and solutions: Overview". Ramsan.com. Retrieved 2013-11-14.

- ↑ "IBM flash storage and solutions: Overview". ibm.com. Retrieved 2014-05-21.

- ↑ "ioDrive Octal". Fusion-io. Retrieved 2013-11-14.

- ↑ "IBM flash storage and solutions: Overview". Ramsan.com. Retrieved 2013-11-14.

- ↑ Lyle Smith. "Kaminario Boasts Over 2 Million IOPS and 20 GB/s Throughput on a Single All-Flash K2 Storage System".

- ↑ Mellor, Chris (2012-07-30). "Million-plus IOPS: Kaminario smashes IBM in DRAM decimation". Theregister.co.uk. Retrieved 2013-11-14.

- ↑ Storage Performance Council. "Storage Performance Council: Active SPC-1 Results". storageperformance.org.

- ↑ "SpecSFS2008". Retrieved 2014-02-07.

- ↑ "Achieves More Than Nine Million IOPS From a Single ioDrive2". Fusion-io. Retrieved 2013-11-14.

- ↑ "E8 Storage 10 million IOPS claim". TheRegister. Retrieved 2016-02-26.

- ↑ "Rack-Scale Flash Appliance - DSSD D5 EMC". EMC. Retrieved 2016-03-23.

- ↑ "Pure Storage Datasheet" (PDF).
