Computational sociology

Computational sociology is a branch of sociology that uses computationally intensive methods to analyze and model social phenomena. Using computer simulations, artificial intelligence, complex statistical methods, and analytic approaches like social network analysis, computational sociology develops and tests theories of complex social processes through bottom-up modeling of social interactions.[1]

It is concerned with understanding social agents, the interactions among these agents, and the effect of these interactions on the social aggregate.[2] Although the subject matter and methodologies of social science differ from those of natural science or computer science, several of the approaches used in contemporary social simulation originated in fields such as physics and artificial intelligence.[3][4] Conversely, some approaches that originated in the social sciences, such as measures of network centrality from social network analysis and network science, have been imported into the natural sciences.

In the relevant literature, computational sociology is often associated with the study of social complexity.[5] Social complexity concepts such as complex systems, non-linear interconnection among macro and micro processes, and emergence have entered the vocabulary of computational sociology.[6] A practical and well-known example is the construction of a computational model in the form of an "artificial society," with which researchers can analyze the structure of a social system.[2][7]

History

Systems theory and structural functionalism

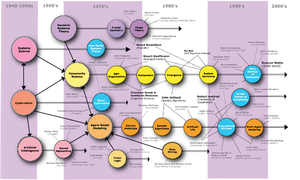

In the post-war era, Vannevar Bush's differential analyser, John von Neumann's cellular automata, Norbert Wiener's cybernetics, and Claude Shannon's information theory became influential paradigms for modeling and understanding complexity in technical systems. In response, scientists in disciplines such as physics, biology, electronics, and economics began to articulate a general theory of systems in which all natural and physical phenomena are manifestations of interrelated elements in a system that has common patterns and properties. Following Émile Durkheim's call to analyze complex modern society sui generis,[8] post-war structural functionalist sociologists such as Talcott Parsons seized upon these theories of systematic and hierarchical interaction among constituent components in an attempt to generate grand unified sociological theories, such as the AGIL paradigm.[9] Sociologists such as George Homans argued that sociological theories should be formalized into hierarchical structures of propositions and precise terminology from which other propositions and hypotheses could be derived and operationalized into empirical studies.[10] Because computer programs had been used as early as 1956 to prove mathematical theorems,[11] and were later used to help prove the four color theorem in 1976, social scientists and systems dynamicists anticipated that similar computational approaches could "solve" and "prove" analogously formalized problems and theorems of social structures and dynamics.

Macrosimulation and microsimulation

By the late 1960s and early 1970s, social scientists used increasingly available computing technology to perform macrosimulations of control and feedback processes in organizations, industries, cities, and global populations. These models used differential equations to predict population distributions as holistic functions of other systemic factors such as inventory control, urban traffic, migration, and disease transmission.[12][13] Simulations of social systems received substantial attention in the mid-1970s after the Club of Rome published reports predicting global environmental catastrophe on the basis of global economy simulations,[14] but these inflammatory conclusions also temporarily discredited the nascent field by demonstrating how sensitive model results are to the specific quantitative assumptions made about the model's parameters (assumptions backed by little evidence, in the case of the Club of Rome).[2][15] Amid growing skepticism about using computational tools to predict macro-level social and economic behavior, social scientists turned their attention toward microsimulation models, which make forecasts and study policy effects by modeling changes in the states of individual-level entities and aggregating them, rather than modeling changes in distributions at the population level.[16] However, these microsimulation models did not permit individuals to interact or adapt and were not intended for basic theoretical research.[1]
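To make the idea of a macrosimulation concrete, the sketch below integrates the SIR compartmental model, a standard textbook system of differential equations for disease transmission, in which the whole population is described only by the shares that are susceptible, infected, and recovered. It is not one of the cited models, and the parameter values (`beta`, `gamma`, the initial shares, the step size) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class SIRState:
    """Population shares in each compartment (they sum to 1)."""
    s: float  # susceptible
    i: float  # infected
    r: float  # recovered


def sir_step(state: SIRState, beta: float, gamma: float, dt: float) -> SIRState:
    """Advance the SIR differential equations by one forward-Euler step.

    dS/dt = -beta * S * I
    dI/dt =  beta * S * I - gamma * I
    dR/dt =  gamma * I
    """
    new_infections = beta * state.s * state.i * dt
    recoveries = gamma * state.i * dt
    return SIRState(
        s=state.s - new_infections,
        i=state.i + new_infections - recoveries,
        r=state.r + recoveries,
    )


def simulate(beta: float = 0.3, gamma: float = 0.1, days: int = 160, dt: float = 0.1):
    """Return the population-level trajectory, sampled once per simulated day."""
    state = SIRState(s=0.99, i=0.01, r=0.0)  # assumed initial shares
    steps_per_day = round(1 / dt)
    trajectory = [state]
    for _ in range(days):
        for _ in range(steps_per_day):
            state = sir_step(state, beta, gamma, dt)
        trajectory.append(state)
    return trajectory


if __name__ == "__main__":
    traj = simulate()
    peak_day = max(range(len(traj)), key=lambda d: traj[d].i)
    print(f"infections peak on day {peak_day} at {traj[peak_day].i:.1%} of the population")
```

Rerunning `simulate` with a slightly different `beta` moves the epidemic peak noticeably, a small-scale illustration of the parameter sensitivity that undermined confidence in early macro models. Nothing in the model represents individuals, which is the gap microsimulation later tried to fill.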

Cellular automata and agent-based modeling

The 1970s and 1980s were also a time when physicists and mathematicians were attempting to model and analyze how simple component units, such as atoms, give rise to global properties, such as complex material properties at low temperatures, in magnetic materials, and within turbulent flows.[17] Using cellular automata, scientists were able to specify systems consisting of a grid of cells in which each cell could occupy only one of a finite set of states, and transitions between states were determined solely by the cell's own state and the states of its immediate neighbors. Along with advances in artificial intelligence and microcomputer power, these methods contributed to the development of "chaos theory" and "complexity theory," which in turn renewed interest in understanding complex physical and social systems across disciplinary boundaries.[2] Research organizations explicitly dedicated to the interdisciplinary study of complexity were also founded in this era: the Santa Fe Institute was established in 1984 by scientists based at Los Alamos National Laboratory, and the BACH group at the University of Michigan likewise started in the mid-1980s.
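A minimal sketch of such a cellular automaton follows. It uses Conway's Game of Life, a well-known two-state automaton chosen here purely as an illustration: each cell is live or dead, and its next state depends only on its own state and its eight immediate neighbors. The wrap-around (toroidal) edges are an assumption made to keep the grid finite.

```python
from typing import List

Grid = List[List[int]]  # 1 = live cell, 0 = dead cell


def live_neighbors(grid: Grid, row: int, col: int) -> int:
    """Count live cells among the eight immediate neighbors, wrapping at the edges."""
    rows, cols = len(grid), len(grid[0])
    total = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # the cell itself is not its own neighbor
            total += grid[(row + dr) % rows][(col + dc) % cols]
    return total


def step(grid: Grid) -> Grid:
    """Apply the Game of Life rules to every cell simultaneously."""
    new_grid = []
    for r, line in enumerate(grid):
        new_line = []
        for c, cell in enumerate(line):
            n = live_neighbors(grid, r, c)
            # A live cell survives with 2 or 3 live neighbors; a dead cell is born with exactly 3.
            new_line.append(1 if n == 3 or (cell == 1 and n == 2) else 0)
        new_grid.append(new_line)
    return new_grid


if __name__ == "__main__":
    # A "blinker": three live cells that flip between horizontal and vertical.
    grid = [
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0],
    ]
    for generation in range(3):
        print(f"generation {generation}")
        for line in grid:
            print("".join("#" if cell else "." for cell in line))
        grid = step(grid)
```

The new grid is built from the old one in a single pass, so every cell updates from the same snapshot; updating in place would let earlier cells' new states leak into their neighbors' rules.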

This cellular automata paradigm gave rise to a third wave of social simulation emphasizing agent-based modeling. Like microsimulations, these models emphasized bottom-up designs, but they adopted four key assumptions that diverged from microsimulation: autonomy, interdependency, simple rules, and adaptive behavior.[1] Agent-based models are less concerned with predictive accuracy and instead emphasize theoretical development.[18] In 1981, mathematician and political scientist Robert Axelrod and evolutionary biologist W. D. Hamilton published a major paper in Science titled "The Evolution of Cooperation," which used an agent-based modeling approach to demonstrate how social cooperation based on reciprocity can be established and stabilized in an iterated prisoner's dilemma when agents follow simple rules of self-interest.[19] Axelrod and Hamilton showed that individual agents following a simple rule set, (1) cooperate on the first turn and (2) thereafter replicate the partner's previous action, were able to develop "norms" of cooperation and sanctioning without canonical sociological constructs such as demographics, values, religion, and culture acting as preconditions or mediators of cooperation.[4] Throughout the 1990s, scholars such as William Sims Bainbridge, Kathleen Carley, Michael Macy, and John Skvoretz developed multi-agent models of generalized reciprocity, prejudice, social influence, and organizational information processing. In 1999, Nigel Gilbert and Klaus G. Troitzsch published the first textbook on social simulation, Simulation for the Social Scientist, and Gilbert also established the field's leading journal, the Journal of Artificial Societies and Social Simulation.
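The two-rule strategy described above (usually called "tit for tat") can be sketched as an iterated prisoner's dilemma. This is not Axelrod and Hamilton's code; the payoff values T=5, R=3, P=1, S=0 are the conventional ones, and `always_defect` is a hypothetical opponent added for contrast.

```python
from typing import Callable, List, Tuple

COOPERATE, DEFECT = "C", "D"

# (row player's payoff, column player's payoff), conventional values T=5, R=3, P=1, S=0.
PAYOFFS = {
    (COOPERATE, COOPERATE): (3, 3),
    (COOPERATE, DEFECT): (0, 5),
    (DEFECT, COOPERATE): (5, 0),
    (DEFECT, DEFECT): (1, 1),
}

# A strategy sees its partner's past moves and returns its next move.
Strategy = Callable[[List[str]], str]


def tit_for_tat(partner_history: List[str]) -> str:
    """Cooperate on the first turn, then copy the partner's previous move."""
    return COOPERATE if not partner_history else partner_history[-1]


def always_defect(partner_history: List[str]) -> str:
    """Hypothetical opponent that ignores history and always defects."""
    return DEFECT


def play(a: Strategy, b: Strategy, rounds: int = 10) -> Tuple[int, int]:
    """Play an iterated prisoner's dilemma and return the two cumulative scores."""
    history_a: List[str] = []  # moves made by a
    history_b: List[str] = []  # moves made by b
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = a(history_b)
        move_b = b(history_a)
        pay_a, pay_b = PAYOFFS[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    print(play(tit_for_tat, tit_for_tat))    # (30, 30): mutual cooperation every round
    print(play(tit_for_tat, always_defect))  # (9, 14): exploited once, then mutual defection
```

Each agent sees only its partner's past moves, so cooperation between two tit-for-tat players emerges from the rule itself rather than from any shared attribute of the agents.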

Data mining and social network analysis

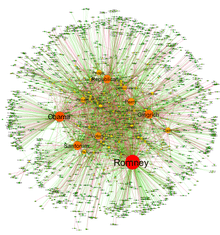

Independently of developments in computational models of social systems, social network analysis emerged in the 1970s and 1980s from advances in graph theory, statistics, and studies of social structure as a distinct analytical method, and was articulated and employed by sociologists such as James S. Coleman, Harrison White, Linton Freeman, J. Clyde Mitchell, Mark Granovetter, Ronald Burt, and Barry Wellman.[20] The increasing pervasiveness of computing and telecommunication technologies throughout the 1980s and 1990s demanded analytical techniques, such as network analysis and multilevel modeling, that could scale to increasingly complex and large data sets. The most recent wave of computational sociology, rather than employing simulations, uses network analysis and advanced statistical techniques to analyze large-scale computer databases of electronic proxies for behavioral data. Electronic records such as email and instant message logs, hyperlinks on the World Wide Web, mobile phone usage, and discussion on Usenet allow social scientists to directly observe and analyze social behavior at multiple points in time and at multiple levels of analysis without the constraints of traditional empirical methods such as interviews, participant observation, or survey instruments.[21] Continued improvements in machine learning algorithms have likewise permitted social scientists and entrepreneurs to use novel techniques to identify latent and meaningful patterns of social interaction and evolution in large electronic datasets.[22][23]
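As a minimal sketch of how electronic records become network data observed at multiple points in time, the code below turns a hypothetical email log into one weighted, directed network per period and computes each sender's out-degree. The record format `(sender, recipient, period)` and the sample data are assumptions; real logs would carry timestamps that must first be bucketed into periods.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

# One logged message: (sender, recipient, period). The format and the sample
# records below are hypothetical; "period" stands in for a bucketed timestamp.
Record = Tuple[str, str, str]


def networks_by_period(records: Iterable[Record]) -> Dict[str, Counter]:
    """Build one weighted, directed edge list per period: (sender, recipient) -> message count."""
    edges: Dict[str, Counter] = defaultdict(Counter)
    for sender, recipient, period in records:
        if sender != recipient:  # skip messages people send to themselves
            edges[period][(sender, recipient)] += 1
    return dict(edges)


def out_degree(edge_weights: Counter) -> Counter:
    """Number of distinct contacts each sender wrote to within one period."""
    degree: Counter = Counter()
    for sender, _recipient in edge_weights:
        degree[sender] += 1
    return degree


if __name__ == "__main__":
    log = [
        ("ana", "ben", "2024-01"), ("ana", "ben", "2024-01"), ("ana", "cy", "2024-01"),
        ("ben", "ana", "2024-02"), ("cy", "ana", "2024-02"), ("cy", "ben", "2024-02"),
    ]
    for period, edges in sorted(networks_by_period(log).items()):
        print(period, dict(edges), dict(out_degree(edges)))
```

Keeping one network per period, rather than a single cumulative graph, is what lets the same measures be compared over time.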

The automatic parsing of textual corpora has enabled the extraction of actors and their relational networks on a vast scale, turning textual data into network data. The resulting networks, which can contain thousands of nodes, are then analyzed using tools from network theory to identify the key actors, the key communities or parties, and general properties such as the robustness or structural stability of the overall network, or the centrality of certain nodes.[25] This automates the approach introduced by quantitative narrative analysis,[26] in which subject-verb-object triplets are mapped to pairs of actors linked by an action, or to actor-object pairs.[24]
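The step from triplets to network measures can be sketched as follows, assuming the subject-verb-object triplets have already been extracted by a parser (not shown). Actors become nodes, each triplet links its subject to its object, and degree centrality (degree divided by n - 1) ranks the most connected actors. The triplets are hypothetical, and the verbs are simply dropped; measures such as community structure or robustness would normally come from a graph library such as NetworkX rather than hand-written code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# A (subject, verb, object) triplet, assumed to come from an upstream parser;
# the parsing step itself is not shown, and the example triplets are hypothetical.
Triplet = Tuple[str, str, str]


def actor_network(triplets: Iterable[Triplet]) -> Dict[str, Set[str]]:
    """Link each subject to the object it acts on (undirected, unweighted; verbs dropped)."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for subject, _verb, obj in triplets:
        if subject != obj:
            graph[subject].add(obj)
            graph[obj].add(subject)
    return dict(graph)


def degree_centrality(graph: Dict[str, Set[str]]) -> Dict[str, float]:
    """Fraction of the other actors each actor is directly linked to: degree / (n - 1)."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(neighbors) / (n - 1) for node, neighbors in graph.items()}


if __name__ == "__main__":
    triplets = [
        ("candidate_a", "criticizes", "candidate_b"),
        ("candidate_b", "attacks", "candidate_a"),
        ("candidate_a", "courts", "voters"),
        ("press", "questions", "candidate_a"),
    ]
    centrality = degree_centrality(actor_network(triplets))
    key_actor = max(centrality, key=centrality.get)
    print(key_actor, centrality)
```

Storing neighbors in sets means repeated mentions of the same pair collapse into one edge; a weighted variant would count them instead.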

Computational content analysis

Content analysis has long been part of the social sciences and media studies. Its automation has enabled a "big data" revolution in the field, with studies of social media and newspaper content that include millions of news items. Gender bias, readability, content similarity, reader preferences, and even mood have been analyzed by applying text mining methods to millions of documents.[27][28][29][30] Flaounas et al.[31] analyzed readability, gender bias, and topic bias, showing that different topics have different gender biases and levels of readability; the possibility of detecting mood shifts in a vast population by analyzing Twitter content has also been demonstrated.[32]
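One simple way to track mood in a stream of posts is lexicon-based scoring: count words from positive and negative word lists and average the result per day. The sketch below is not necessarily the method used in the cited studies, and the tiny word lists and sample posts are hypothetical; real work relies on validated lexicons and far larger corpora.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Tiny illustrative word lists (hypothetical); real studies use validated lexicons.
POSITIVE = {"happy", "great", "love", "hope"}
NEGATIVE = {"sad", "angry", "fear", "worried"}

TOKEN = re.compile(r"[a-z']+")


def mood_score(text: str) -> float:
    """(positive words - negative words) / total tokens for one document; 0.0 if empty."""
    tokens = TOKEN.findall(text.lower())
    if not tokens:
        return 0.0
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return (pos - neg) / len(tokens)


def daily_mood(posts: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Average mood per day from (day, text) pairs, e.g. posts bucketed by date."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for day, text in posts:
        totals[day] += mood_score(text)
        counts[day] += 1
    return {day: totals[day] / counts[day] for day in sorted(totals)}


if __name__ == "__main__":
    posts = [
        ("2012-05-01", "Great news, full of hope today"),
        ("2012-05-01", "worried about my job"),
        ("2012-05-02", "so angry and sad about the cuts"),
    ]
    for day, score in daily_mood(posts).items():
        print(day, round(score, 3))
```

A mood shift then appears as a sustained change in the daily series, which is why such analyses need very large numbers of posts per day to average out noise.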

In 2008, Yukihiko Yoshida conducted a study titled "Leni Riefenstahl and German Expressionism: A Study of Visual Cultural Studies Using Transdisciplinary Semantic Space of Specialized Dictionaries".[33] The study used databases of images tagged with connotative and denotative keywords (a search engine) and found that Riefenstahl's imagery had the same qualities as imagery tagged "degenerate", the term used in the title of the 1937 "Degenerate Art" exhibition in Germany.

Journals and academic publications

The leading journal of the discipline is the Journal of Artificial Societies and Social Simulation.

- Complexity Research Journal List, from UIUC, IL

- Related Research Groups, from UIUC, IL

Associations, conferences and workshops

- North American Association for Computational Social and Organization Sciences

- ESSA: European Social Simulation Association

Academic programs, departments and degrees

- University of Bristol "Mediapatterns" project

- Carnegie Mellon University, PhD program in Computation, Organizations and Society (COS)

- George Mason University, PhD program in CSS (Computational Social Sciences)

- George Mason University, MA program in Interdisciplinary Studies, CSS emphasis

- Portland State, PhD program in Systems Science

- Portland State, MS program in Systems Science

- UCD, PhD Program in Complex Systems and Computational Social Science (University College Dublin, Ireland)

- UCLA, Minor in Human Complex Systems

- UCLA, Major in Computational & Systems Biology (including behavioral sciences)

- Univ. of Michigan, Minor in Complex Systems

- Systems Science Programs List, Portland State: a list of related programs worldwide.

Centers and institutes

USA

- Center for Complex Networks and Systems Research, Indiana University, Bloomington, IN, USA.

- Center for Complex Systems Research, University of Illinois at Urbana-Champaign, IL, USA.

- Center for Social Complexity, George Mason University, Fairfax, VA, USA.

- Center for Social Dynamics and Complexity, Arizona State University, Tempe, AZ, USA.

- Center for the Study of Complex Systems, University of Michigan, Ann Arbor, MI, USA.

- Human Complex Systems, University of California Los Angeles, Los Angeles, CA, USA.

- Institute for Quantitative Social Science, Harvard University, Cambridge, MA, USA.

- Northwestern Institute on Complex Systems (NICO), Northwestern University, Evanston, IL, USA.

- Santa Fe Institute, Santa Fe, NM, USA.

Europe

- Centre for Policy Modelling, Manchester, UK.

- Centre for Research in Social Simulation, University of Surrey, UK.

- Dynamics Lab, Geary Institute, University College Dublin, Dublin, Ireland.

- Groningen Center for Social Complexity Studies (GCSCS), Groningen, NL.

- Chair of Sociology, in particular of Modeling and Simulation (SOMS), Zürich, Switzerland.

- Research Group on Experimental and Computational Sociology (GECS), Brescia, IT.

Asia

- Bandung Fe Institute, Centre for Complexity in Surya University, Bandung, Indonesia.

See also

- Artificial society

- Simulated reality

- Social simulation

- Agent-based social simulation

- Social complexity

- Computational economics

- Computational epidemiology

- Cliodynamics

References

- [1] Macy, Michael W.; Willer, Robert (2002). "From Factors to Actors: Computational Sociology and Agent-Based Modeling". Annual Review of Sociology. 28: 143–166. doi:10.1146/annurev.soc.28.110601.141117. JSTOR 3069238.

- [2] Gilbert, Nigel; Troitzsch, Klaus (2005). "Simulation and social science". Simulation for the Social Scientist (2nd ed.). Open University Press.

- [3] Epstein, Joshua M.; Axtell, Robert (1996). Growing Artificial Societies: Social Science from the Bottom Up. Washington, DC: Brookings Institution Press.

- [4] Axelrod, Robert (1997). The Complexity of Cooperation: Agent-Based Models of Competition and Collaboration. Princeton, NJ: Princeton University Press.

- [5] Casti, J. (1999). "The Computer as Laboratory: Toward a Theory of Complex Adaptive Systems". Complexity. 4 (5): 12–14. doi:10.1002/(SICI)1099-0526(199905/06)4:5<12::AID-CPLX3>3.0.CO;2-4.

- [6] Goldspink, C. (2002). "Methodological Implications of Complex Systems Approaches to Sociality: Simulation as a Foundation for Knowledge". Journal of Artificial Societies and Social Simulation. 5 (1).

- [7] Epstein, Joshua (2007). Generative Social Science: Studies in Agent-Based Computational Modeling. Princeton, NJ: Princeton University Press.

- [8] Durkheim, Émile. The Division of Labor in Society. New York, NY: Macmillan.

- [9] Bailey, Kenneth D. (2006). "Systems Theory". In Turner, Jonathan H. (ed.). Handbook of Sociological Theory. New York, NY: Springer Science. pp. 379–404. ISBN 0-387-32458-5.

- [10] Bainbridge, William Sims (2007). "Computational Sociology". In Ritzer, George (ed.). Blackwell Encyclopedia of Sociology. Blackwell Reference Online. doi:10.1111/b.9781405124331.2007.x. ISBN 978-1-4051-2433-1.

- [11] Crevier, D. (1993). AI: The Tumultuous History of the Search for Artificial Intelligence. New York, NY: Basic Books.

- [12] Forrester, Jay (1971). World Dynamics. Cambridge, MA: MIT Press.

- [13] Ignall, Edward J.; Kolesar, Peter; Walker, Warren E. (1978). "Using Simulation to Develop and Validate Analytic Models: Some Case Studies". Operations Research. 26 (2): 237–253. doi:10.1287/opre.26.2.237.

- [14] Meadows, D. L.; Behrens, W. W.; Meadows, D. H.; Naill, R. F.; Randers, J.; Zahn, E. K. (1974). The Dynamics of Growth in a Finite World. Cambridge, MA: MIT Press.

- [15] "Computer View of Disaster Is Rebutted". The New York Times. October 18, 1974.

- [16] Orcutt, Guy H. (1990). "From engineering to microsimulation: An autobiographical reflection". Journal of Economic Behavior & Organization. 14 (1): 5–27. doi:10.1016/0167-2681(90)90038-F.

- [17] Toffoli, Tommaso; Margolus, Norman (1987). Cellular Automata Machines: A New Environment for Modeling. Cambridge, MA: MIT Press.

- [18] Gilbert, Nigel (1997). "A simulation of the structure of academic science". Sociological Research Online. 2 (2).

- [19] Axelrod, Robert; Hamilton, William D. (March 27, 1981). "The Evolution of Cooperation". Science. 211 (4489): 1390–1396. doi:10.1126/science.7466396. PMID 7466396.

- [20] Freeman, Linton C. (2004). The Development of Social Network Analysis: A Study in the Sociology of Science. Vancouver, BC: Empirical Press.

- [21] Lazer, David; Pentland, Alex; Adamic, L.; Aral, S.; Barabási, A.-L.; Brewer, D.; Christakis, N.; Contractor, N.; et al. (February 6, 2009). "Life in the network: the coming age of computational social science". Science. 323 (5915): 721–723. doi:10.1126/science.1167742. PMC 2745217. PMID 19197046.

- [22] Srivastava, Jaideep; Cooley, Robert; Deshpande, Mukund; Tan, Pang-Ning (2000). "Web usage mining: discovery and applications of usage patterns from Web data". ACM SIGKDD Explorations Newsletter. 1 (2): 12–23. doi:10.1145/846183.846188.

- [23] Brin, Sergey; Page, Lawrence (April 1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. 30 (1–7): 107–117. doi:10.1016/S0169-7552(98)00110-X.

- [24] Sudhahar, S.; Veltri, G. A.; Cristianini, N. (2015). "Automated analysis of the US presidential elections using Big Data and network analysis". Big Data & Society. 2 (1): 1–28. doi:10.1177/2053951715572916.

- [25] Sudhahar, S.; De Fazio, G.; Franzosi, R.; Cristianini, N. (2013). "Network analysis of narrative content in large corpora". Natural Language Engineering. 21 (1): 1–32. doi:10.1017/S1351324913000247.

- [26] Franzosi, Roberto (2010). Quantitative Narrative Analysis. Emory University.

- [27] Flaounas, I.; Turchi, M.; Ali, O.; Fyson, N.; De Bie, T.; Mosdell, N.; Lewis, J.; Cristianini, N. (2010). "The Structure of the EU Mediasphere" (PDF). PLoS ONE. 5 (12): e14243. doi:10.1371/journal.pone.0014243.

- [28] Lampos, V.; Cristianini, N. (2012). "Nowcasting Events from the Social Web with Statistical Learning" (PDF). ACM Transactions on Intelligent Systems and Technology (TIST). 3 (4): 72. doi:10.1145/2337542.2337557.

- [29] Flaounas, I.; Ali, O.; Turchi, M.; Snowsill, T.; Nicart, F.; De Bie, T.; Cristianini, N. (2011). NOAM: news outlets analysis and monitoring system (PDF). Proc. of the 2011 ACM SIGMOD International Conference on Management of Data. doi:10.1145/1989323.1989474.

- [30] Cristianini, N. (2011). "Automatic discovery of patterns in media content". Combinatorial Pattern Matching. Lecture Notes in Computer Science. 6661. pp. 2–13. doi:10.1007/978-3-642-21458-5_2. ISBN 978-3-642-21457-8.

- [31] Flaounas, I.; Ali, O.; Turchi, M.; Lansdall-Welfare, T.; De Bie, T.; Mosdell, N.; Lewis, J.; Cristianini, N. (2012). "Research methods in the age of digital journalism". Digital Journalism. Routledge. doi:10.1080/21670811.2012.714928.

- [32] Lansdall-Welfare, T.; Lampos, V.; Cristianini, N. (2012). Effects of the Recession on Public Mood in the UK (PDF). Proceedings of the 21st International Conference on World Wide Web. Mining Social Network Dynamics (MSND) session on Social Media Applications. New York, NY, USA. pp. 1221–1226. doi:10.1145/2187980.2188264.

- [33] Yoshida, Yukihiko (2008). Ascott, Roy (ed.). "Leni Riefenstahl and German Expressionism: A Study of Visual Cultural Studies Using Transdisciplinary Semantic Space of Specialized Dictionaries". Technoetic Arts: A Journal of Speculative Research. 6 (3). doi:10.1386/tear.6.3.287_1.

External links

- Online book "Simulation for the Social Scientist" by Nigel Gilbert and Klaus G. Troitzsch, 1999, second edition 2005

- Journal of Artificial Societies and Social Simulation

- Agent-based models for social networks, interactive Java applets

- Sociology and Complexity Science Website
