Sound localization

Sound localization refers to a listener's ability to identify the location or origin of a detected sound in direction and distance. Sound localization techniques have emerged alongside considerable progress in acoustic simulation. By modeling a sound field that contains one or several sources, they make it possible to localize the acoustic sources and to reproduce what a listener would hear at any position in that field. The term may also refer to the methods in acoustical engineering used to simulate the placement of an auditory cue in a virtual 3D space (see binaural recording, wave field synthesis).

The sound localization mechanisms of the mammalian auditory system have been extensively studied. As a relatively new interdisciplinary field, sound localization involves psychological acoustics, physiological acoustics, artificial intelligence and high-performance computing (HPC) systems. It has been widely applied to data transmission, information recognition, and improving the immersion, fidelity and sense of reality of 3D environments, in both military[1] and civilian applications.

The auditory system uses several cues for sound source localization, including time and level differences (or intensity differences) between the two ears, spectral information, timing analysis, correlation analysis, and pattern matching. These cues are also used by other animals, but their usage may differ, and some animals use localization cues that are absent in the human auditory system, such as the effects of ear movements. Animals with the ability to localize sound have a clear evolutionary advantage.

How sound reaches the brain

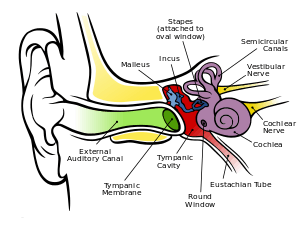

Sound is the perceptual result of mechanical vibrations traveling through a medium such as air or water. Through the mechanisms of compression and rarefaction, sound waves travel through the air, bounce off the pinna and concha of the exterior ear, and enter the ear canal. The sound waves vibrate the tympanic membrane (eardrum), causing the three bones of the middle ear to vibrate. These send the energy through the oval window into the cochlea, where hair cells in the organ of Corti change it into a chemical signal; the hair cells synapse onto spiral ganglion fibers that travel through the cochlear nerve into the brain.

Human Auditory System

The human ear has a complex physical structure. As shown in fig. 1, it can be divided into three parts: the outer, middle and inner ear.

The outer ear consists of the auricle, the external auditory meatus and the eardrum. In human adults, the external auditory canal is about 2.7 cm long with a diameter of around 0.7 cm. Because the canal is closed at its inner end by the eardrum, it behaves like a tube closed at one end, with its lowest resonant frequency around 3060 Hz. This resonance amplifies sound by about 10 dB. The eardrum is cone-shaped, about 0.1 mm thick, with an area of about 69 mm². At normal speech levels, the eardrum displacement is around 0.1 nm. In general, the outer ear serves two functions: localizing the sound source and amplifying the acoustic signals.
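The resonance figure can be checked with the textbook quarter-wavelength model of a tube closed at one end, f₁ = c / (4L), where only odd multiples of f₁ resonate. The sketch below is only that idealization (rigid, uniform tube, no end correction); the speed-of-sound values are assumptions. With L = 2.7 cm, the 3060 Hz quoted above corresponds to c ≈ 330 m/s, while c = 343 m/s (air at about 20 °C) gives roughly 3180 Hz.

```python
def closed_tube_resonances(length_m: float, c: float = 343.0, count: int = 3) -> list[float]:
    """Resonant frequencies (Hz) of an ideal tube open at one end and closed at the other.

    Only odd multiples of the quarter-wave frequency c / (4 * L) resonate.
    Simplified model: no end correction, rigid walls, uniform cross-section.
    """
    f1 = c / (4.0 * length_m)
    return [(2 * k - 1) * f1 for k in range(1, count + 1)]


canal_length = 0.027  # 2.7 cm adult ear canal, as stated in the text
for c in (330.0, 343.0):  # assumed speeds of sound in m/s
    modes = closed_tube_resonances(canal_length, c)
    print(f"c = {c:.0f} m/s -> " + ", ".join(f"{f:.0f} Hz" for f in modes))
```

The higher modes (3f₁, 5f₁) lie above the main speech range, which is why the first resonance is the one usually quoted.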

The middle ear contains a chain of three ossicles: the malleus, incus (anvil) and stapes. Its functions are to transform acoustic impedance (matching the air in the ear canal to the fluid of the inner ear) and to protect the inner ear.

The main part of the inner ear is the cochlea, the receiver of the human auditory system, which converts acoustic signals into nerve stimulation.

More detailed information can be found in Ear.

Neural Interactions

In vertebrates, interaural time differences are known to be calculated in the superior olivary nucleus of the brainstem. According to Jeffress,[2] this calculation relies on delay lines: neurons in the superior olive receive innervation from each ear through connecting axons of different lengths. Some cells are more directly connected to one ear than the other, so they are specific for a particular interaural time difference. This theory is equivalent to the mathematical procedure of cross-correlation. However, because Jeffress' theory cannot account for the precedence effect, in which only the first of multiple identical sounds is used to determine the sounds' location (thus avoiding confusion caused by echoes), it cannot fully explain the response. Furthermore, a number of recent physiological observations made in the midbrain and brainstem of small mammals have cast considerable doubt on the validity of Jeffress' original ideas.[3]
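The equivalence between Jeffress' delay lines and cross-correlation can be illustrated with a small sketch: each candidate lag plays the role of one coincidence-detector cell tuned to a particular interaural delay, and the lag with the largest correlation is taken as the ITD estimate. This is a signal-processing illustration under simplifying assumptions (discrete samples, an integer-sample delay, identical signals at both ears), not a model of the actual neural circuitry; the function name `estimate_itd` and the sampling rate are hypothetical.

```python
import random


def estimate_itd(left: list[float], right: list[float], fs: float, max_lag: int) -> float:
    """Estimate the interaural time difference by brute-force cross-correlation.

    Each lag in [-max_lag, max_lag] acts like one Jeffress "delay line" cell.
    A positive result means the right signal lags the left one (left ear first).
    Assumes both signals have the same length.
    """
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlate left[i] with right[i + lag] over the overlapping samples only.
        score = sum(
            left[i] * right[i + lag]
            for i in range(max(0, -lag), min(n, n - lag))
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / fs


fs = 44_100  # assumed sampling rate in Hz
delay = 20   # right ear lags by 20 samples (about 454 microseconds)
random.seed(0)
source = [random.gauss(0.0, 1.0) for _ in range(2000)]  # broadband noise source
left = source
right = [0.0] * delay + source[:-delay]
itd = estimate_itd(left, right, fs, max_lag=40)
print(f"estimated ITD = {itd * 1e6:.0f} microseconds")
```

Broadband noise is used because its autocorrelation has a single sharp peak; for a pure tone the correlation would repeat every period, which is the same phase ambiguity discussed later for high frequencies.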

Neurons sensitive to interaural level differences (ILDs) are excited by stimulation of one ear and inhibited by stimulation of the other ear, such that the response magnitude of the cell depends on the relative strengths of the two inputs, which in turn depend on the sound intensities at the ears.

In the auditory midbrain nucleus, the inferior colliculus (IC), many ILD-sensitive neurons have response functions that decline steeply from maximum to zero spikes as a function of ILD. However, there are also many neurons with much shallower response functions that do not decline to zero spikes.

The Cone of Confusion

Most mammals are adept at resolving the location of a sound source using interaural time differences and interaural level differences. However, no such time or level differences exist for sounds originating along the circumference of circular conical slices, where the cone's axis lies along the line between the two ears.

Consequently, sound waves originating at any point on the circumference of a given cone will have ambiguous perceptual coordinates. That is to say, the listener cannot determine whether the sound originated from the back, front, top, bottom or anywhere else along the circumference at the base of a cone at any given distance from the ear. Of course, the importance of these ambiguities is vanishingly small for sound sources very close to or very far away from the subject, but it is these intermediate distances that are most important in terms of fitness.
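A simple way to see why the cone arises is to replace the head by two point receivers on the interaural axis. For a distant source, the path-length difference, and hence the arrival-time difference, depends only on the projection of the source direction onto that axis, so every direction on the same cone gives the same ITD. The sketch below makes this far-field, no-head assumption explicit; the 0.215 m ear spacing, the 343 m/s speed of sound and the function names are assumptions for illustration. A real head adds diffraction and shadowing, but the symmetry is the same.

```python
import math

EAR_SPACING = 0.215     # m, assumed distance between the two ears
SPEED_OF_SOUND = 343.0  # m/s, assumed


def direction(azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Unit vector: x forward, y to the left, z up; azimuth counter-clockwise from the front."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))


def far_field_itd(azimuth_deg: float, elevation_deg: float) -> float:
    """ITD in seconds for two point 'ears' at y = +/- d/2 and a distant source.

    Only the y-component (the projection on the interaural axis) matters,
    which is why all directions on one cone share the same ITD.
    Positive values mean the left ear receives the sound first.
    """
    _, y, _ = direction(azimuth_deg, elevation_deg)
    return EAR_SPACING * y / SPEED_OF_SOUND


# Front-left, back-left and upper-left directions lying on the same cone of confusion.
for name, az, el in [("front", 30, 0), ("back", 150, 0), ("above", 90, 60)]:
    print(f"{name:>5}: ITD = {far_field_itd(az, el) * 1e6:.1f} microseconds")
```

All three directions have the same interaural projection (0.5), so in this model they are indistinguishable from time differences alone.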

These ambiguities can be removed by tilting the head, which introduces a shift in both the amplitude and phase of the sound waves arriving at each ear. Tilting rotates the interaural axis out of the horizontal, so that vertical differences in source position become partly interaural differences that can be resolved by the mechanisms of localization in the horizontal plane. Moreover, even without any alteration in the angle of the interaural axis (i.e. without tilting one's head), the hearing system can capitalize on interference patterns generated by the pinnae, the torso, and even the temporary repurposing of a hand as an extension of the pinna (e.g., cupping one's hand around the ear).

As with other sensory stimuli, perceptual disambiguation is also accomplished through integration of multiple sensory inputs, especially visual cues. Having localized a sound within the circumference of a circle at some perceived distance, visual cues serve to fix the location of the sound. Moreover, prior knowledge of the location of the sound generating agent will assist in resolving its current location.

Sound Localization by The Human Auditory System

Sound localization is the process of determining the location of a sound source. From an engineering standpoint, the major goal of sound localization is to simulate a specific sound field, including the acoustic sources, the listener, and the media and environments of sound propagation. The brain utilizes subtle differences in intensity, spectral, and timing cues to allow us to localize sound sources.[4][5] To better understand the human auditory mechanism, this section briefly discusses the theory of sound localization by the human ear.

General Introduction

Localization can be described in terms of three-dimensional position: the azimuth or horizontal angle, the elevation or vertical angle, and the distance (for static sounds) or velocity (for moving sounds).[6]

The azimuth of a sound is signaled by the difference in arrival times between the ears, by the relative amplitude of high-frequency sounds (the shadow effect), and by the asymmetrical spectral reflections from various parts of our bodies, including torso, shoulders, and pinnae.[6]

The distance cues are the loss of amplitude, the loss of high frequencies, and the ratio of the direct signal to the reverberated signal.[6]

Depending on where the source is located, our head acts as a barrier that changes the timbre, intensity, and spectral qualities of the sound, helping the brain determine where the sound came from.[5] These minute differences between the two ears are known as interaural cues.[5]

Low-frequency sounds, with their longer wavelengths, diffract around the head, forcing the brain to focus only on the phase cues from the source.[5]

Helmut Haas discovered that we can discern the sound source using the earliest arriving wave front, even when additional reflections are up to 10 decibels louder than the original wave front.[5] This principle is known as the Haas effect, a specific version of the precedence effect.[5] Haas found that a timing difference as small as 1 millisecond between the original sound and the reflected sound increased the perceived spaciousness while still allowing the brain to discern the true location of the original sound. The nervous system combines all early reflections into a single perceptual whole, allowing the brain to process multiple different sounds at once.[7] The nervous system will combine reflections that are within about 35 milliseconds of each other and that have a similar intensity.[7]

Duplex Theory

To determine the lateral input direction (left, front, right), the auditory system analyzes the following ear signal information:


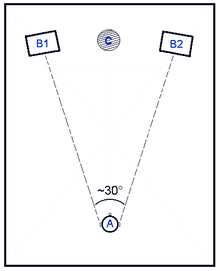

In 1907, Lord Rayleigh used tuning forks to generate monophonic excitation and studied lateral sound localization on a human head model without auricles. He was the first to present a sound localization theory based on interaural cue differences, known as the Duplex Theory.[8] The two ears are on opposite sides of the head and thus have different coordinates in space. As shown in fig. 2, because the distances from the acoustic source to the two ears differ, there are time and intensity differences between the sound signals at the two ears. These differences are called the Interaural Time Difference (ITD) and the Interaural Intensity Difference (IID), respectively.

ITD and IID


[sound source: 100 ms white noise from right]

[sound source: a sweep from right]

As fig. 2 shows, for either source B1 or source B2 there is a propagation delay between the two ears, which generates the ITD. At the same time, the head and ears shadow high-frequency signals, which generates the IID.

- Interaural Time Difference (ITD): Sound from the right side reaches the right ear earlier than the left ear. The auditory system evaluates interaural time differences from (a) phase delays at low frequencies and (b) group delays at high frequencies.

- Many experiments show that the ITD depends on the signal frequency f. Suppose the angular position of the acoustic source is θ, the head radius is r and the speed of sound is c; the ITD is then given by:[9]

  $$\mathrm{ITD} = \begin{cases} 3\,\dfrac{r}{c}\sin\theta, & f \le 4000\ \text{Hz},\\[4pt] 2\,\dfrac{r}{c}\sin\theta, & f > 4000\ \text{Hz}. \end{cases}$$

  In this closed form, 0° is directly in front of the head and counter-clockwise is positive.

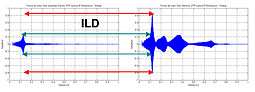

- Interaural Intensity Difference (IID) or Interaural Level Difference (ILD): Sound from the right side has a higher level at the right ear than at the left ear, because the head shadows the left ear. These level differences are highly frequency dependent and increase with increasing frequency. Theoretical studies show that the IID depends on the signal frequency f and the angular position θ of the acoustic source. The IID is given by:[9]

  $$\mathrm{IID} = 1.0 + \left(\frac{f}{1000}\right)^{0.8}\sin\theta$$

- For frequencies below 1000 Hz, mainly ITDs (phase delays) are evaluated; for frequencies above 1500 Hz, mainly IIDs are evaluated. Between 1000 Hz and 1500 Hz there is a transition zone in which both mechanisms play a role.

- Localization accuracy is 1 degree for sources in front of the listener and 15 degrees for sources to the sides. Humans can discern interaural time differences of 10 microseconds or less.[10][11]
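The frequency-dependent rules in this list can be combined into a small helper. The sketch assumes the spherical-head approximation ITD = 3(r/c)·sin θ for f ≤ 4000 Hz and ITD = 2(r/c)·sin θ above 4000 Hz (the closed form attributed to [9]), a head radius of 8.75 cm and c = 343 m/s; both constants and the function names are illustrative assumptions. The cue-selection thresholds (1000 Hz and 1500 Hz) are the ones given above.

```python
import math

HEAD_RADIUS = 0.0875    # m, assumed average adult head radius
SPEED_OF_SOUND = 343.0  # m/s, assumed


def itd_seconds(theta_deg: float, freq_hz: float,
                r: float = HEAD_RADIUS, c: float = SPEED_OF_SOUND) -> float:
    """Approximate ITD for a source at azimuth theta (0 deg = straight ahead, counter-clockwise positive)."""
    factor = 3.0 if freq_hz <= 4000.0 else 2.0
    return factor * (r / c) * math.sin(math.radians(theta_deg))


def dominant_cue(freq_hz: float) -> str:
    """Which interaural cue mainly drives lateral localization at this frequency."""
    if freq_hz < 1000.0:
        return "ITD (phase delay)"
    if freq_hz > 1500.0:
        return "IID"
    return "transition: ITD and IID"


for f in (500.0, 1200.0, 6000.0):
    print(f"{f:>6.0f} Hz: ITD(90 deg) = {itd_seconds(90.0, f) * 1e6:.0f} us, cue = {dominant_cue(f)}")
```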

Evaluation for low frequencies

For frequencies below 800 Hz, the dimensions of the head (ear distance 21.5 cm, corresponding to an interaural time delay of 625 µs) are smaller than half the wavelength of the sound waves, so the auditory system can determine phase delays between the two ears without confusion. Interaural level differences are very low in this frequency range, especially below about 200 Hz, so a precise evaluation of the input direction is nearly impossible on the basis of level differences alone. As the frequency drops below 80 Hz, it becomes difficult or impossible to use either time difference or level difference to determine a sound's lateral source, because the phase difference between the ears becomes too small for a directional evaluation.[12]
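The 800 Hz boundary follows from simple arithmetic: interaural phase stays unambiguous while half a wavelength, c / (2f), is longer than the ear distance. The sketch assumes c = 343 m/s together with the 21.5 cm ear distance from the text; it reproduces the quoted delay of about 625 µs and the 800 Hz limit, and also the roughly 1600 Hz point, used in the next subsection, at which a full wavelength matches the ear distance.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed
EAR_DISTANCE = 0.215    # m, as given in the text

# Largest possible interaural delay: sound travelling the full ear distance.
max_itd = EAR_DISTANCE / SPEED_OF_SOUND
# Phase is unambiguous while the ear distance is below half a wavelength: d < c / (2 f).
f_half_wavelength = SPEED_OF_SOUND / (2 * EAR_DISTANCE)
# Above this frequency a whole wavelength fits between the ears.
f_full_wavelength = SPEED_OF_SOUND / EAR_DISTANCE

print(f"maximum ITD           : {max_itd * 1e6:.0f} us")
print(f"half wavelength = d at: {f_half_wavelength:.0f} Hz")
print(f"wavelength = d at     : {f_full_wavelength:.0f} Hz")
```

The small differences from the rounded values in the text (627 µs rather than 625 µs, for example) come only from the assumed speed of sound.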

Evaluation for high frequencies

For frequencies above 1600 Hz, the dimensions of the head are greater than the wavelength of the sound waves. An unambiguous determination of the input direction based on interaural phase alone is not possible at these frequencies. However, the interaural level differences become larger, and these level differences are evaluated by the auditory system. Group delays between the ears can also be evaluated, and they are more pronounced at higher frequencies; that is, if there is a sound onset, the delay of this onset between the ears can be used to determine the input direction of the corresponding sound source. This mechanism becomes especially important in reverberant environments. After a sound onset there is a short time frame in which the direct sound reaches the ears, but not yet the reflected sound. The auditory system uses this short time frame for evaluating the sound source direction, and keeps this detected direction as long as reflections and reverberation prevent an unambiguous direction estimation.[13] The mechanisms described above cannot be used to differentiate between a sound source ahead of the hearer or behind the hearer; therefore additional cues have to be evaluated.[14]
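The onset (group-delay) cue can be sketched as a comparison of when each ear's signal envelope first crosses a threshold. This is a deliberately crude stand-in for the auditory system's onset analysis: the rectify-and-smooth envelope, the smoothing constant, the 50%-of-peak threshold, the sampling rate and the function names are all assumptions chosen for illustration.

```python
import math


def envelope(signal: list[float], smoothing: float = 0.05) -> list[float]:
    """Full-wave rectify, then smooth with a one-pole low-pass filter."""
    env, state = [], 0.0
    for x in signal:
        state += smoothing * (abs(x) - state)
        env.append(state)
    return env


def onset_index(signal: list[float], fraction: float = 0.5) -> int:
    """First sample at which the envelope reaches `fraction` of its maximum."""
    env = envelope(signal)
    threshold = fraction * max(env)
    return next(i for i, v in enumerate(env) if v >= threshold)


def onset_itd(left: list[float], right: list[float], fs: float) -> float:
    """Onset delay between the ears; positive means the onset reached the left ear first."""
    return (onset_index(right) - onset_index(left)) / fs


fs = 48_000.0  # assumed sampling rate in Hz
# A 4 kHz tone burst: too high for unambiguous phase comparison, but its onset is still usable.
burst = [math.sin(2 * math.pi * 4000.0 * n / fs) for n in range(960)]
left = [0.0] * 100 + burst + [0.0] * 200
right = [0.0] * 124 + burst + [0.0] * 176  # right ear 24 samples (500 us) later
print(f"onset ITD = {onset_itd(left, right, fs) * 1e6:.0f} us")
```

Because the envelope ignores the carrier's phase, the 500 µs delay is recovered even though it spans several periods of the 4 kHz tone.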

Pinna Filtering Effect Theory

Motivations

Duplex theory clearly shows that ITD and IID play significant roles in sound localization, but they can only resolve lateral position. For example, according to duplex theory, two acoustic sources located symmetrically at the right front and right back of the head generate equal ITDs and IIDs; this is known as the cone of confusion. However, human ears can actually distinguish these two sources. In addition, in natural hearing, a single ear, which receives no ITD or IID, can still localize sources with high accuracy. Because of these shortcomings of duplex theory, researchers proposed the pinna filtering effect theory.[15] The human pinna has a distinctive shape: it is concave, with complex folds, and asymmetrical both horizontally and vertically. The reflected waves and the direct waves combine to produce a source-dependent frequency spectrum at the eardrum, and the auditory nerves localize the sources from this spectrum. The resulting theory is called the pinna filtering effect theory.[16]

Math Model

These spectral cues generated by the pinna filtering effect can be represented as Head-Related Transfer Functions (HRTFs). The corresponding time-domain expressions are called Head-Related Impulse Responses (HRIRs). An HRTF can also be described as the transfer function from the free field to a specific point in the ear canal. HRTFs are usually treated as linear time-invariant (LTI) systems:[9]

$$H_L = H_L(r,\theta,\varphi,\omega,\alpha) = \frac{P_L(r,\theta,\varphi,\omega,\alpha)}{P_0(r,\omega)}, \qquad H_R = H_R(r,\theta,\varphi,\omega,\alpha) = \frac{P_R(r,\theta,\varphi,\omega,\alpha)}{P_0(r,\omega)},$$

where L and R denote the left and right ear respectively, $P_L$ and $P_R$ are the amplitudes of the sound pressure at the entrances of the left and right ear canals, and $P_0$ is the amplitude of the sound pressure at the position of the center of the head with the listener absent. In general, the HRTFs $H_L$ and $H_R$ are functions of the source angular position $\theta$, the elevation angle $\varphi$, the distance $r$ between the source and the center of the head, the angular frequency $\omega$ and the equivalent dimension $\alpha$ of the head.
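Because HRTFs are treated as LTI systems, a binaural signal for one fixed source position can be produced by convolving a mono signal with the left- and right-ear HRIRs, which is the time-domain equivalent of multiplying its spectrum by $H_L$ and $H_R$. In the sketch below the two "HRIRs" are toy placeholders (a pure delay plus a gain), not measured data; in practice they would come from a measured set such as those in the HRTF databases listed in the next subsection. The function names are hypothetical.

```python
def convolve(x: list[float], h: list[float]) -> list[float]:
    """Direct-form linear convolution: y[n] = sum_k h[k] * x[n - k]."""
    y = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        for k, hk in enumerate(h):
            y[n + k] += xn * hk
    return y


def render_binaural(mono: list[float], hrir_left: list[float],
                    hrir_right: list[float]) -> tuple[list[float], list[float]]:
    """Filter one mono source through a left/right HRIR pair (one fixed direction)."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIRs for a source on the listener's right (placeholders, not measurements):
# the right ear hears it immediately at full level, the left ear 3 samples later and attenuated.
hrir_right = [1.0, 0.0, 0.0, 0.0]
hrir_left = [0.0, 0.0, 0.0, 0.5]

mono = [1.0, -0.5, 0.25]
left, right = render_binaural(mono, hrir_left, hrir_right)
print("left :", left)
print("right:", right)
```

Real HRIRs are a few hundred samples long and also encode the pinna's direction-dependent spectral shaping, so for moving sources the filter pair has to be switched or interpolated over time.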

HRTF Database

At present, the main institutes that measure HRTF databases include CIPIC[17] International Lab, MIT Media Lab, the Graduate School in Psychoacoustics at the University of Oldenburg, the Neurophysiology Lab at the University of Wisconsin-Madison and the Ames Lab of NASA. They carefully measure HRIRs from both humans and animals and share the results on the Internet for research use.

Other Cues for 3D Space Localization

Monaural cues

The human outer ear, i.e. the structures of the pinna and the external ear canal, forms direction-selective filters. Depending on the sound input direction in the median plane, different filter resonances become active. These resonances implant direction-specific patterns into the frequency responses of the ears, which can be evaluated by the auditory system (directional bands) for vertical sound localization. Together with other direction-selective reflections at the head, shoulders and torso, they form the outer ear transfer functions. These patterns in the ear's frequency responses are highly individual, depending on the shape and size of the outer ear. If sound is presented through headphones and has been recorded via another head with differently shaped outer ear surfaces, the directional patterns differ from the listener's own, and problems appear when trying to evaluate directions in the median plane with these foreign ears. As a consequence, front–back permutations or inside-the-head localization can appear when listening to dummy head recordings, also referred to as binaural recordings. It has been shown that human subjects can monaurally localize high-frequency sound but not low-frequency sound. Binaural localization, however, was possible with lower frequencies. This is likely because the pinna is small enough to interact only with high-frequency sound waves.[18] It seems that people can only accurately localize the elevation of sounds that are complex and include frequencies above 7,000 Hz, and a pinna must be present.[19]

Dynamic binaural cues

When the head is stationary, the binaural cues for lateral sound localization (interaural time difference and interaural level difference) do not give information about the location of a sound in the median plane. Identical ITDs and ILDs can be produced by sounds at eye level or at any elevation, as long as the lateral direction is constant. However, if the head is rotated, the ITD and ILD change dynamically, and those changes are different for sounds at different elevations. For example, if an eye-level sound source is straight ahead and the head turns to the left, the sound becomes louder (and arrives sooner) at the right ear than at the left. But if the sound source is directly overhead, there will be no change in the ITD and ILD as the head turns. Intermediate elevations will produce intermediate degrees of change, and if the presentation of binaural cues to the two ears during head movement is reversed, the sound will be heard behind the listener.[14][20] Hans Wallach[21] artificially altered a sound’s binaural cues during movements of the head. Although the sound was objectively placed at eye level, the dynamic changes to ITD and ILD as the head rotated were those that would be produced if the sound source had been elevated. In this situation, the sound was heard at the synthesized elevation. The fact that the sound sources objectively remained at eye level prevented monaural cues from specifying the elevation, showing that it was the dynamic change in the binaural cues during head movement that allowed the sound to be correctly localized in the vertical dimension. The head movements need not be actively produced; accurate vertical localization occurred in a similar setup when the head rotation was produced passively, by seating the blindfolded subject in a rotating chair. As long as the dynamic changes in binaural cues accompanied a perceived head rotation, the synthesized elevation was perceived.[14]
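The head-rotation argument can be checked numerically with a far-field model: two point ears on the interaural axis and no head shadow. Rotating the head about the vertical axis changes the projection of the source direction onto the interaural axis by an amount proportional to the cosine of the elevation, so an eye-level source shows the largest ITD change and an overhead source none. The ear spacing, speed of sound and function name are assumptions for illustration.

```python
import math

EAR_SPACING = 0.215     # m, assumed
SPEED_OF_SOUND = 343.0  # m/s, assumed


def itd_after_head_turn(source_elev_deg: float, head_turn_deg: float,
                        source_az_deg: float = 0.0) -> float:
    """Far-field ITD (s) after rotating the head about the vertical axis.

    head_turn_deg > 0 turns the head to the left (counter-clockwise seen from above).
    Positive ITD means the left ear leads; negative means the right ear leads.
    """
    rel_az = math.radians(source_az_deg - head_turn_deg)  # source azimuth in head coordinates
    el = math.radians(source_elev_deg)
    interaural_component = math.cos(el) * math.sin(rel_az)
    return EAR_SPACING * interaural_component / SPEED_OF_SOUND


for elev in (0, 45, 90):  # eye level, intermediate, directly overhead
    change = itd_after_head_turn(elev, 20.0) - itd_after_head_turn(elev, 0.0)
    print(f"elevation {elev:>2} deg: ITD change for a 20 deg left turn = {change * 1e6:+.1f} us")
```

For the eye-level source the change is negative (the right ear now leads), matching the example in the text; the overhead source yields essentially zero, and the 45° source lies in between.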

Distance of the sound source

The human auditory system has only limited means of determining the distance of a sound source. At close range there are some indications for distance determination, such as extreme level differences (e.g. when whispering into one ear) or specific pinna (the visible part of the ear) resonances.

The auditory system uses these cues to estimate the distance to a sound source:

- Direct/reflection ratio: In enclosed rooms, two types of sound arrive at a listener. The direct sound arrives at the listener's ears without being reflected at a wall, while reflected sound has been reflected at least once before arriving. The ratio between direct and reflected sound can give an indication of the distance of the sound source.

- Loudness: Distant sound sources have a lower loudness than close ones. This aspect can be evaluated especially for well-known sound sources.

- Sound spectrum: High frequencies are damped by the air more quickly than low frequencies. Therefore, a distant sound source sounds more muffled than a close one, because its high frequencies are attenuated. For sound with a known spectrum (e.g. speech), the distance can be roughly estimated from the perceived sound.

- ITDG: The Initial Time Delay Gap describes the time difference between arrival of the direct wave and first strong reflection at the listener. Nearby sources create a relatively large ITDG, with the first reflections having a longer path to take, possibly many times longer. When the source is far away, the direct and the reflected sound waves have similar path lengths.

- Movement: As in the visual system, there is a phenomenon of motion parallax in acoustical perception: for a moving listener, nearby sound sources pass by faster than distant ones.

- Level Difference: Very close sound sources cause a different level between the ears.
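Two of these cues lend themselves to back-of-the-envelope numbers. Under free-field spreading (the inverse-square law), level falls by 20·log10(r₂/r₁) dB, about 6 dB per doubling of distance; and the ITDG can be estimated from the extra path length of the first reflection. The sketch assumes a single floor reflection modeled with an image source, source and listener at the same height (1.5 m here), and c = 343 m/s; real rooms have many reflections, and the first strong one need not come from the floor. Function names and geometry are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def level_drop_db(r_near: float, r_far: float) -> float:
    """Free-field (inverse-square law) level difference between two distances."""
    return 20.0 * math.log10(r_far / r_near)


def itdg_floor_reflection(distance: float, height: float, c: float = SPEED_OF_SOUND) -> float:
    """Initial time delay gap (s) between the direct sound and a single floor reflection.

    Assumes source and listener at the same height above a reflecting floor;
    the reflection is modeled with an image source mirrored below the floor.
    """
    direct = distance
    reflected = math.hypot(distance, 2.0 * height)
    return (reflected - direct) / c


print(f"level drop 1 m -> 2 m: {level_drop_db(1.0, 2.0):.1f} dB")
for d in (1.0, 10.0):
    print(f"source at {d:>4.0f} m: ITDG = {itdg_floor_reflection(d, 1.5) * 1e3:.2f} ms")
```

The nearby source gives a much longer gap than the distant one, because the reflected path is proportionally much longer than the direct path when the source is close.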

Signal processing

Sound processing of the human auditory system is performed in so-called critical bands. The hearing range is segmented into 24 critical bands, each with a width of 1 Bark or 100 Mel. For a directional analysis the signals inside the critical band are analyzed together.
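To see how a frequency maps onto the critical-band scale, one widely used analytic approximation is that of Zwicker and Terhardt, z = 13·arctan(0.00076·f) + 3.5·arctan((f/7500)²), with f in Hz and z in Bark. It is one of several published approximations, not necessarily the one behind the figures above, and the helper names are hypothetical; the sketch converts frequencies to Bark and assigns each to a 1-Bark-wide band.

```python
import math


def hz_to_bark(freq_hz: float) -> float:
    """Zwicker & Terhardt approximation of the critical-band rate in Bark."""
    return 13.0 * math.atan(0.00076 * freq_hz) + 3.5 * math.atan((freq_hz / 7500.0) ** 2)


def critical_band_index(freq_hz: float) -> int:
    """Index (1-based) of the 1-Bark-wide band containing freq_hz."""
    return int(hz_to_bark(freq_hz)) + 1


for f in (100.0, 1000.0, 4000.0, 12000.0):
    print(f"{f:>7.0f} Hz -> {hz_to_bark(f):5.2f} Bark (band {critical_band_index(f)})")
```

Signals whose frequencies fall in the same band would, in this picture, be analyzed together for direction.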

The auditory system can extract the sound of a desired sound source out of interfering noise. So the auditory system can concentrate on only one speaker if other speakers are also talking (the cocktail party effect). With the help of the cocktail party effect sound from interfering directions is perceived attenuated compared to the sound from the desired direction. The auditory system can increase the signal-to-noise ratio by up to 15 dB, which means that interfering sound is perceived to be attenuated to half (or less) of its actual loudness.

Localization in enclosed rooms

In enclosed rooms, not only the direct sound from a sound source arrives at the listener's ears, but also sound that has been reflected at the walls. For sound localization, the auditory system analyzes only the direct sound,[13] which arrives first, and not the reflected sound, which arrives later (law of the first wave front). So sound localization remains possible even in an echoic environment. This echo cancellation occurs in the Dorsal Nucleus of the Lateral Lemniscus (DNLL).

In order to determine the time periods in which the direct sound prevails and which can be used for directional evaluation, the auditory system analyzes loudness changes in different critical bands and also the stability of the perceived direction. If there is a strong attack of the loudness in several critical bands and if the perceived direction is stable, this attack is in all probability caused by the direct sound of a sound source that has just appeared or is changing its signal characteristics. This short time period is used by the auditory system for directional and loudness analysis of this sound. When reflections arrive slightly later, they do not enhance the loudness inside the critical bands as strongly, but the directional cues become unstable, because sound from several reflection directions is mixed. As a result, no new directional analysis is triggered by the auditory system.

This first detected direction from the direct sound is taken as the found sound source direction, until other strong loudness attacks, combined with stable directional information, indicate that a new directional analysis is possible. (see Franssen effect)
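The decision rule described in the last two paragraphs (update the direction only on a strong loudness attack in several critical bands while the perceived direction is stable, otherwise keep the earlier estimate) can be written as a small state machine. The sketch is a schematic reading of that rule, not a model of neural processing: the frame representation, the thresholds (a rise of at least 6 dB in at least 3 bands, direction jitter of at most 10 degrees) and all names are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    band_level_rise_db: list[float]  # loudness increase per critical band since the previous frame
    direction_deg: float             # momentary direction estimate for this frame
    direction_jitter_deg: float      # spread of the momentary estimates (instability)


def track_direction(frames: list[Frame], rise_db: float = 6.0, min_bands: int = 3,
                    max_jitter_deg: float = 10.0) -> list[Optional[float]]:
    """Latch a direction on stable onsets and hold it through reflections."""
    held: Optional[float] = None
    out: list[Optional[float]] = []
    for fr in frames:
        strong_attack = sum(r >= rise_db for r in fr.band_level_rise_db) >= min_bands
        stable = fr.direction_jitter_deg <= max_jitter_deg
        if strong_attack and stable:
            held = fr.direction_deg  # direct sound: start a new directional analysis
        out.append(held)             # otherwise keep the first detected direction
    return out


frames = [
    Frame([9, 8, 7, 10], direction_deg=30.0, direction_jitter_deg=3.0),   # onset of direct sound
    Frame([1, 2, 0, 1], direction_deg=-40.0, direction_jitter_deg=35.0),  # reflections arrive
    Frame([2, 7, 1, 0], direction_deg=-60.0, direction_jitter_deg=25.0),  # still reverberant
    Frame([8, 9, 9, 6], direction_deg=-20.0, direction_jitter_deg=4.0),   # new source onset
]
print(track_direction(frames))
```

The reverberant frames carry unstable, misleading directions, but because they bring no strong, stable attack, the direction found at the first onset is held until the next one.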

Specific Sound Localization Techniques with Applications

Auditory Transmission Stereo System[22]

This kind of sound localization technique provides a true virtual stereo system. It uses "smart" manikins, such as KEMAR, to pick up signals, or uses DSP methods to simulate the transmission from the sources to the ears. After amplification, recording and transmission, the two channels of received signals are reproduced through earphones or speakers. This approach uses electroacoustic methods to obtain the spatial information of the original sound field, in effect transferring the listener's auditory apparatus into the original sound field. Its main advantage is that its acoustic images are lively and natural. Also, it needs only two independently transmitted signals to reproduce the acoustic image of a 3D system.

3D Para-virtualization Stereo System[22]

Representative systems of this kind are SRS Audio Sandbox, Spatializer Audio Lab and Qsound Qxpander. They use HRTFs to simulate the acoustic signals received at the ears from different directions using ordinary two-channel stereo reproduction. In this way they can simulate reflected sound waves and improve the subjective sense of space and envelopment. As para-virtualization stereo systems, their main goal is to simulate stereo sound information. Traditional stereo systems use sensors that are quite different from human ears: although those sensors can receive acoustic information from different directions, they do not have the same frequency response as the human auditory system. Therefore, when two-channel playback is used, listeners still do not perceive a 3D sound field. The 3D para-virtualization stereo system overcomes this disadvantage: it uses HRTF principles to capture acoustic information from the original sound field and then produces a lively 3D sound field through ordinary earphones or speakers.

Multichannel Stereo Virtual Reproduction[22]

Because multichannel stereo systems require many reproduction channels, some researchers have adopted HRTF simulation technologies to reduce the number of channels, using only two speakers to simulate the multiple speakers of a multichannel system. This process is called virtual reproduction. Essentially, such approaches use both the interaural difference principle and the pinna filtering effect theory. Unfortunately, they cannot perfectly substitute for a traditional multichannel stereo system such as 5.1 surround sound, because when the listening zone is relatively large, reproduction simulated through HRTFs may produce inverted acoustic images at symmetric positions.

Animals

Since most animals have two ears, many of the effects of the human auditory system can also be found in other animals. Therefore, interaural time differences (interaural phase differences) and interaural level differences play a role for the hearing of many animals. But the influences on localization of these effects are dependent on head sizes, ear distances, the ear positions and the orientation of the ears.

Lateral information (left, ahead, right)

If the ears are located at the side of the head, lateral localization cues similar to those of the human auditory system can be used: interaural time differences (interaural phase differences) for lower frequencies and interaural level differences for higher frequencies. Evaluating interaural phase differences is useful as long as it gives unambiguous results, which is the case as long as the ear distance is smaller than half the wavelength (at most one wavelength) of the sound. For animals with a larger head than humans, the evaluation range for interaural phase differences is shifted towards lower frequencies; for animals with a smaller head, it is shifted towards higher frequencies.

The lowest frequency which can be localized depends on the ear distance. Animals with a greater ear distance can localize lower frequencies than humans can. For animals with a smaller ear distance the lowest localizable frequency is higher than for humans.

If the ears are located at the side of the head, interaural level differences appear for higher frequencies and can be evaluated for localization tasks. For animals with ears at the top of the head, there is no shadowing by the head, and therefore much smaller interaural level differences are available for evaluation. Many of these animals can move their ears, and these ear movements can be used as a lateral localization cue.

Sound localization by odontocetes

Dolphins (and other odontocetes) rely on echolocation to aid in detecting, identifying, localizing, and capturing prey. Dolphin sonar signals are well suited for localizing multiple, small targets in a three‐dimensional aquatic environment by utilizing highly directional (3 dB beamwidth of about 10 deg), broadband (3 dB bandwidth typically of about 40 kHz; peak frequencies between 40 kHz and 120 kHz), short-duration clicks (about 40 μs). Dolphins can localize sounds both passively and actively (echolocation) with a resolution of about 1 deg. Cross‐modal matching (between vision and echolocation) suggests dolphins perceive the spatial structure of complex objects interrogated through echolocation, a feat that likely requires spatially resolving individual object features and integration into a holistic representation of object shape. Although dolphins are sensitive to small binaural intensity and time differences, mounting evidence suggests dolphins employ position‐dependent spectral cues derived from well-developed head‐related transfer functions for sound localization in both the horizontal and vertical planes. A very small temporal integration time (264 μs) allows localization of multiple targets at varying distances. Localization adaptations include pronounced asymmetry of the skull, nasal sacs, and specialized lipid structures in the forehead and jaws, as well as acoustically isolated middle and inner ears.

Sound localization in the median plane (front, above, back, below)

For many mammals there are also pronounced structures in the pinna near the entry of the ear canal. As a consequence, direction-dependent resonances can appear, which could be used as an additional localization cue, similar to the localization in the median plane in the human auditory system. There are additional localization cues which are also used by animals.

Head tilting

For sound localization in the median plane (elevation of the sound), two detectors positioned at different heights can also be used. In animals, however, rough elevation information is gained simply by tilting the head, provided that the sound lasts long enough to complete the movement. This explains the innate behavior of cocking the head to one side when trying to localize a sound precisely. To get instantaneous localization in more than two dimensions from time-difference or amplitude-difference cues requires more than two detectors.

Localization with coupled ears (flies)

The tiny parasitoid fly Ormia ochracea has become a model organism in sound localization experiments because of its unique ear. The animal is too small for the time difference of sound arriving at the two ears to be calculated in the usual way, yet it can determine the direction of sound sources with exquisite precision. The tympanic membranes of opposite ears are directly connected mechanically, which allows the fly to resolve sub-microsecond time differences[23][24] and requires a new neural coding strategy.[25] Ho and Narins[26] showed that the coupled-eardrum system in frogs can produce increased interaural vibration disparities when only small differences in arrival time and sound level are available at the animal's head. Efforts to build directional microphones based on the coupled-eardrum structure are underway.
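The scale problem can be made concrete with the usual free-field approximation for the interaural time difference (ITD) of a distant source, ITD ≈ (d/c)·sin θ, where d is the separation between the ears and θ is the azimuth. The sketch assumes c = 343 m/s and uses an ear separation of 0.5 mm as an order-of-magnitude stand-in for the fly and 0.2 m for a human-sized head. Both separations are illustrative assumptions, and the formula ignores diffraction around the head.

```python
import math

SPEED_OF_SOUND_AIR = 343.0  # m/s, assumed


def interaural_time_difference(ear_separation_m, azimuth_deg=90.0,
                               c=SPEED_OF_SOUND_AIR):
    """Free-field ITD in seconds for a distant source:
    ITD = d * sin(azimuth) / c (diffraction around the head is ignored)."""
    return ear_separation_m * math.sin(math.radians(azimuth_deg)) / c


# Assumed separations: about 0.5 mm (fly-sized) and 0.2 m (human-sized)
print(f"fly:   {interaural_time_difference(0.0005) * 1e6:.2f} us")  # fly:   1.46 us
print(f"human: {interaural_time_difference(0.2) * 1e6:.0f} us")     # human: 583 us
print(f"fly at 10 deg: {interaural_time_difference(0.0005, 10.0) * 1e9:.0f} ns")  # fly at 10 deg: 253 ns
```

Even with the source directly to the side, the assumed fly-sized separation gives an ITD under 1.5 μs, about 400 times smaller than for the human-sized head, and sources nearer the midline produce differences of only a few hundred nanoseconds.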

Bi-coordinate sound localization (owls)

Most owls are nocturnal or crepuscular birds of prey. Because they hunt at night, they must rely on non-visual senses. Experiments by Roger Payne[27] have shown that owls detect their prey through the sounds it makes, not through its heat or smell. In fact, sound cues are both necessary and sufficient for an owl to localize mice from a distant perch. For this to work, the owls must be able to localize accurately both the azimuth and the elevation of the sound source.

See also

- Acoustic location

- Animal echolocation

- Binaural fusion

- Coincidence detection in neurobiology

- Human echolocation

- Perceptual-based 3D sound localization

- Psychoacoustics

- Spatial hearing loss

References

- McKinley R L, Ericson M A. Flight demonstration of a 3-D auditory display[J]. 1997.

- Jeffress L.A. (1948). "A place theory of sound localization". Journal of Comparative and Physiological Psychology. 41: 35–39. doi:10.1037/h0061495. PMID 18904764.

- Schnupp J., Nelken I. & King A.J., 2011. Auditory Neuroscience, MIT Press, chapter 5.

- Blauert, J.: Spatial hearing: the psychophysics of human sound localization; MIT Press; Cambridge, Massachusetts (1983)

- Thompson, Daniel M. Understanding Audio: Getting the Most out of Your Project or Professional Recording Studio. Boston, MA: Berklee, 2005. Print.

- Roads, Curtis. The Computer Music Tutorial. Cambridge, MA: MIT, 2007. Print.

- Benade, Arthur H. Fundamentals of Musical Acoustics. New York: Oxford UP, 1976. Print.

- Rayleigh L. XII. On our perception of sound direction[J]. The London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science, 1907, 13(74): 214-232.

- Zhou X. Virtual reality technique[J]. Telecommunications Science, 1996, 12(7): 46-50.

- Ian Pitt. "Auditory Perception". Archived from the original on 2010-04-10.

- DeLiang Wang; Guy J. Brown (2006). Computational auditory scene analysis: principles, algorithms and applications. Wiley-Interscience. ISBN 9780471741091.

For sinusoidal signals presented in the horizontal plane, spatial resolution is highest for sounds coming from the median plane (directly in front of the listener), where the minimum audible angle (MAA) is about 1 degree. Resolution deteriorates markedly as stimuli move to the side; e.g., the MAA is about 7 degrees for sounds originating 75 degrees to the side.

- http://acousticslab.org/psychoacoustics/PMFiles/Module07a.htm

- Wallach, H; Newman, E.B.; Rosenzweig, M.R. (July 1949). "The precedence effect in sound localization". American Journal of Psychology. 62 (3): 315–336. doi:10.2307/1418275.

- Wallach, Hans (October 1940). "The role of head movements and vestibular and visual cues in sound localization". Journal of Experimental Psychology. 27 (4): 339–368. doi:10.1037/h0054629.

- Batteau D W. The role of the pinna in human localization[J]. Proceedings of the Royal Society of London B: Biological Sciences, 1967, 168(1011): 158-180.

- Musicant A D, Butler R A. The influence of pinnae-based spectral cues on sound localization[J]. The Journal of the Acoustical Society of America, 1984, 75(4): 1195-1200.

- "The CIPIC HRTF Database".

- Butler, Robert A.; Humanski, Richard A. (1992). "Localization of sound in the vertical plane with and without high-frequency spectral cues". Perception & Psychophysics. 51 (2): 182–186. doi:10.3758/bf03212242.

- Roffler Suzanne K.; Butler Robert A. (1968). "Factors That Influence the Localization of Sound in the Vertical Plane". J. Acoust. Soc. Am. 43 (6): 1255–1259. doi:10.1121/1.1910976.

- Thurlow, W.R. "Audition" in Kling, J.W. & Riggs, L.A., Experimental Psychology, 3rd edition, Holt Rinehart & Winston, 1971, pp. 267–268.

- Wallach, H (1939). "On sound localization". Journal of the Acoustical Society of America. 10 (4): 270–274. doi:10.1121/1.1915985.

- Zhao R. Study of Auditory Transmission Sound Localization System[D], University of Science and Technology of China, 2006.

- Miles RN, Robert D, Hoy RR (Dec 1995). "Mechanically coupled ears for directional hearing in the parasitoid fly Ormia ochracea". J Acoust Soc Am. 98 (6): 3059–70. doi:10.1121/1.413830. PMID 8550933.

- Robert D, Miles RN, Hoy RR (1996). "Directional hearing by mechanical coupling in the parasitoid fly Ormia ochracea". J Comp Physiol [A]. 179 (1): 29–44. doi:10.1007/BF00193432. PMID 8965258.

- Mason AC, Oshinsky ML, Hoy RR (Apr 2001). "Hyperacute directional hearing in a microscale auditory system". Nature. 410 (6829): 686–90. doi:10.1038/35070564. PMID 11287954.

- Ho CC, Narins PM (Apr 2006). "Directionality of the pressure-difference receiver ears in the northern leopard frog, Rana pipiens pipiens". J Comp Physiol [A]. 192 (4): 417–29. doi:10.1007/s00359-005-0080-7.

- Payne, Roger S., 1962. How the Barn Owl Locates Prey by Hearing. The Living Bird, First Annual of the Cornell Laboratory of Ornithology, 151-159

External links

- auditoryneuroscience.com: Collection of multimedia files and flash demonstrations related to spatial hearing

- Collection of references about sound localization

- Scientific articles about the sound localization abilities of different species of mammals

- Interaural Intensity Difference Processing in Auditory Midbrain Neurons: Effects of a Transient Early Inhibitory Input

- Online learning center - Hearing and Listening

- HearCom: Hearing in the Communication Society, an EU research project

- Research on "Non-line-of-sight (NLOS) Localisation for Indoor Environments" by CMR at UNSW

- An introduction to sound localization

- Sound and Room

- An introduction to acoustic holography

- An introduction to acoustic beamforming